Readers

Choose from cloud, file or relational services. Data can be collated from more than one source using multiple reader nodes.

- Click to edit

- Right-click to remove

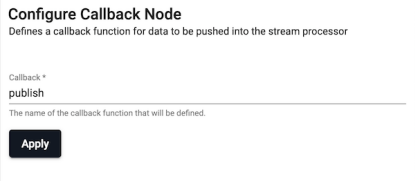

Callback

Defines a callback in the q global namespace.

- Callback: The name of the callback function to be defined.

Expression

Runs a kdb+ expression.

- Expr: A q expression.

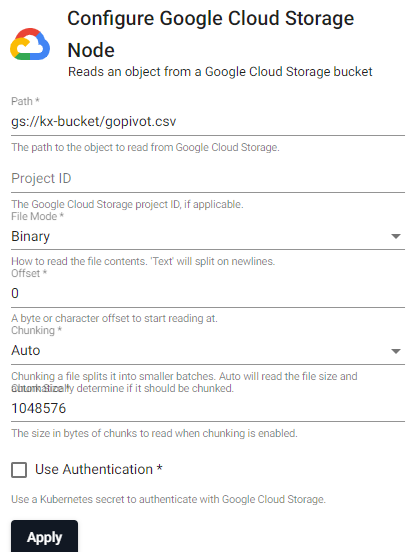

Google Cloud Storage

Read data from Google Cloud Storage.

| item | description |
|---|---|
| Path | The path of an object to read from Google Cloud Storage, e.g. gs://bucketname/filename.csv |
| Project ID | The Google Cloud Storage project ID, if applicable. |
| File Mode | The file read mode. In binary mode, content is read as a byte vector. In text mode, content is read as strings split on newlines, so each string represents a single line. |
| Offset | The byte or character offset at which to start reading. The default is 0. |
| Chunking | Splits the file into smaller batches. Auto reads the file size and determines whether the file should be chunked. |
| Chunk Size | The size of each chunk when chunking is enabled. |
| Use Authentication | Enable Kubernetes secret authentication. |
| Kubernetes Secret | The name of a Kubernetes secret to authenticate with Google Cloud Storage. |
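
The File Mode and Offset settings can be illustrated with a local file. The sketch below is plain Python, not the platform's reader: `read_content` and the sample file are hypothetical and only show the difference between a byte vector and newline-split strings, assuming the offset counts bytes in binary mode and characters in text mode.

```python
import os
import tempfile


def read_content(path, mode="binary", offset=0):
    """Illustrative stand-in for the two file modes (not the real reader)."""
    with open(path, "rb") as handle:
        raw = handle.read()
    if mode == "binary":
        # Binary mode: return the raw bytes from the byte offset onwards.
        return raw[offset:]
    # Text mode: decode, skip `offset` characters, then split on newlines
    # so that each returned string is a single line.
    return raw.decode("utf-8")[offset:].splitlines()


# Write a small sample file to read back in both modes.
with tempfile.NamedTemporaryFile(delete=False, suffix=".csv") as tmp:
    tmp.write(b"a,b\n1,2\n3,4\n")
    sample_path = tmp.name

binary_result = read_content(sample_path, mode="binary")
text_result = read_content(sample_path, mode="text", offset=4)
os.remove(sample_path)

print(binary_result)  # the whole file as bytes
print(text_result)    # the lines after the 4-character header row
```

Starting the text read at offset 4 skips the header line `a,b` and its newline, which is one way an offset might be used in practice.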

Google Cloud Storage Authentication

Google Cloud Storage Authentication uses kurl for credential validation.

Environment variable authentication

To set up authentication using an environment variable, set GOOGLE_STORAGE_TOKEN to the output of the following gcloud CLI command:

    gcloud auth print-access-token

Kubernetes secrets for authentication

A Kubernetes secret may be used to authenticate files. This secret needs to be created in the same namespace as the KX Insights Platform install. The name of that secret is then used in the Kubernetes Secret field when configuring the reader.

To create a Kubernetes secret with Google Cloud credentials:

    kubectl create secret generic SECRET_NAME DATA

SECRET_NAME is the name of the secret used in the reader. DATA is the data to add to the secret; for example, a path to a directory containing one or more configuration files using the --from-file or --from-env-file flag, or key-value pairs each specified using --from-literal flags.

HTTP

Read data from a URL.

| item | description |
|---|---|
| URL | The URL to send the request to. |
| Method | The HTTP method to use for the request; for example, GET or POST. Defaults to GET. |
| Body | The payload of the HTTP request. |
| Header | A map of header fields to send as part of the request. |
| onResponse | After a response, allows the response to be preprocessed or to trigger another request. Returning :: processes the return from the original request immediately. Returning a string issues another request with the return value as the URL. Returning a dictionary allows any of the operator parameters to be reset and a new HTTP request issued. A special 'response' key can be used in the returned dictionary to change the payload of the response. If the response key is set to ::, no data is pushed into the pipeline. |
| followRedirects | When checked, redirects are followed automatically, up to the MaxRedirects value. |
| MaxRedirects | The maximum number of redirects to follow before reporting an error. |
| MaxRetryAttempts | The maximum number of times to retry a request that fails due to a timeout. |
| Timeout | The duration in milliseconds to wait for a request to complete before reporting an error. |
| Tenant | The request tenant to use for providing request authentication details. |
| Insecure | When checked, unverified server SSL/TLS certificates are considered trustworthy. |
| Binary | When checked, the payload is returned as binary data; otherwise the payload is treated as text data. |
| Sync | When checked, a synchronous request blocks the process until the request is completed. The default is an asynchronous request. |
| RejectErrors | When checked, a non-successful response generates an error and stops the pipeline. |
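
The interaction between followRedirects and MaxRedirects can be sketched with an in-memory redirect map instead of real HTTP traffic. This is a simplified model, not the reader's implementation: `resolve`, `TooManyRedirects` and the example.com URLs are hypothetical, and the sketch assumes that following exactly MaxRedirects redirects is still allowed.

```python
class TooManyRedirects(Exception):
    """Raised when the redirect limit is exceeded (hypothetical name)."""


def resolve(url, redirects, follow_redirects=True, max_redirects=5):
    """Return the URL finally served, following `redirects` (source -> target)."""
    hops = 0
    while url in redirects:
        if not follow_redirects:
            # Redirects are not followed: stay on the originally requested URL.
            return url
        if hops >= max_redirects:
            raise TooManyRedirects(f"gave up after {max_redirects} redirects")
        url = redirects[url]
        hops += 1
    return url


# Two chained redirects: /a -> /b -> /c
redirects = {
    "http://example.com/a": "http://example.com/b",
    "http://example.com/b": "http://example.com/c",
}
final = resolve("http://example.com/a", redirects, max_redirects=2)
print(final)
```

With `max_redirects=1` the same call raises `TooManyRedirects`, which corresponds to the reader reporting an error once the limit is reached.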

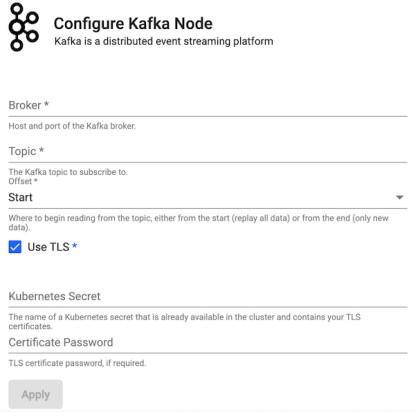

Kafka

Consume data from a Kafka topic. Kafka is a distributed event streaming platform. For context, a Kafka writer publishes data to a Kafka broker, from which it can be consumed by any downstream listeners, such as this reader. All data published to Kafka must be encoded as either strings or serialized bytes. Data that reaches the Kafka writer without being encoded is converted to its q IPC serialization representation.

| item | description |
|---|---|
| Brokers | Location of the Kafka broker as host:port. |
| Topic | The name of the Kafka topic. |
| Offset | Read data from the Start of the topic (all data) or from the End (new data only). |
| Use TLS | Enable TLS. |
| Kubernetes Secret | The name of a Kubernetes secret that is already available in the cluster and contains your TLS certificates. |
| Certificate Password | TLS certificate password, if required. |
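
The two Offset choices can be modelled with plain lists. This is a deliberately simplified sketch and does not use a Kafka client; `messages_seen`, `backlog` and `live` are hypothetical names for, respectively, the model function, the messages already on the topic when the reader starts, and the messages that arrive afterwards.

```python
def messages_seen(existing, arriving, offset="End"):
    """Return the messages a reader would receive under each Offset setting."""
    if offset == "Start":
        # Start: replay everything already on the topic, then new data.
        return list(existing) + list(arriving)
    if offset == "End":
        # End: skip the backlog and receive new data only.
        return list(arriving)
    raise ValueError(f"unknown offset setting: {offset!r}")


backlog = ["m1", "m2"]  # already published before the reader starts
live = ["m3"]           # published after the reader starts

from_start = messages_seen(backlog, live, offset="Start")
from_end = messages_seen(backlog, live, offset="End")
print(from_start, from_end)
```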

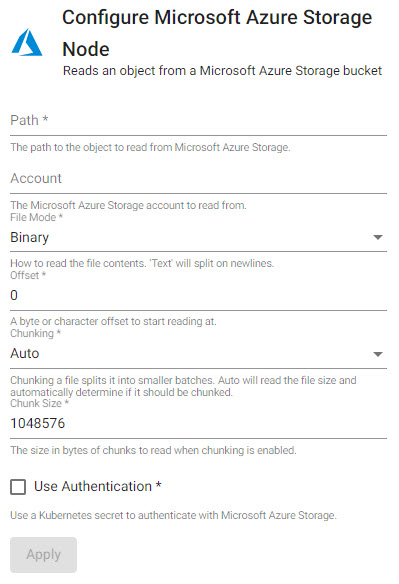

Microsoft Azure Storage

Read data stored in Microsoft Azure Storage. A file is chunked if it is sufficiently large (greater than a few megabytes).

| item | description |
|---|---|
| Path | The source data path to read from Azure Storage; e.g. ms://bucket/numbers.txt |
| Account | The name of the Azure Storage Account hosting the data; corresponds to $AZURE_STORAGE_ACCOUNT. |
| Mode | Data is read as either binary or text. Binary mode reads content as a byte vector. Text mode reads content as strings split on newlines, so each string represents a single line. The default is binary. |
| Offset | The byte or character offset at which to start reading data. |
| Chunking | Splits the file into smaller batches. Chunking can be enabled, disabled or auto; auto reads the file size to determine whether the file should be chunked. |
| Chunk Size | The size in bytes of chunks to read when chunking is enabled. |
| Use Authentication | Enable Kubernetes secret authentication. |
| Kubernetes Secret | The name of a Kubernetes secret to authenticate with Azure Storage. |
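
The Chunking and Chunk Size settings can be sketched as a generator over a byte stream. This is an illustration under stated assumptions rather than the reader's actual logic: `should_chunk`, `read_batches` and `AUTO_THRESHOLD_BYTES` are hypothetical, and the 4 MB threshold is a placeholder because the text only says "a few megabytes".

```python
import io

# Hypothetical cut-off for "auto"; the real threshold is not documented here.
AUTO_THRESHOLD_BYTES = 4 * 1024 * 1024


def should_chunk(file_size, chunking="auto"):
    """Decide whether to chunk, given the setting and the file size in bytes."""
    if chunking == "enabled":
        return True
    if chunking == "disabled":
        return False
    return file_size > AUTO_THRESHOLD_BYTES  # "auto"


def read_batches(stream, file_size, chunking="auto", chunk_size=1024 * 1024):
    """Yield the stream as one batch, or as chunks of at most chunk_size bytes."""
    if not should_chunk(file_size, chunking):
        yield stream.read()
        return
    while True:
        chunk = stream.read(chunk_size)
        if not chunk:
            break
        yield chunk


payload = b"0123456789"
batches = list(read_batches(io.BytesIO(payload), len(payload),
                            chunking="enabled", chunk_size=4))
single = list(read_batches(io.BytesIO(payload), len(payload), chunking="auto"))
print(batches)  # three batches of at most 4 bytes each
print(single)   # one batch, because the payload is below the threshold
```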

Microsoft Azure Authentication

Microsoft Azure Storage Authentication uses kurl for credential validation.

Environment variable authentication

To set up authentication with an environment variable, set AZURE_STORAGE_ACCOUNT to the name of the storage account to read from, and AZURE_STORAGE_SHARED_KEY to one of the keys of the account. Additional details on shared key usage are available here.

Kubernetes secrets for authentication

A Kubernetes secret may be used to authenticate files. This secret needs to be created in the same namespace as the KX Insights Platform install. The name of that secret is then used in the Kubernetes Secret field when configuring the reader.

To create a Kubernetes secret with Azure credentials:

    kubectl create secret generic secret-name --from-literal=azurestorageaccountname=$AKS_PERS_STORAGE_ACCOUNT_NAME --from-literal=azurestorageaccountkey=$STORAGE_KEY

KX Insights Stream

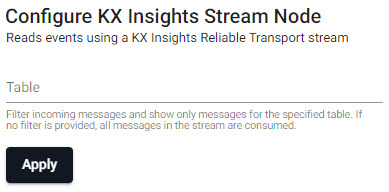

Reads data from a KX Insights Stream.

| item | description |
|---|---|
| Table | Filter incoming messages to show only messages for the specified table. If no filter is provided, all messages in the stream are consumed. |
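
The effect of the Table filter can be sketched as follows. The message shape is an assumption made for illustration: each message is modelled as a `(table_name, rows)` tuple, and `filter_stream` is a hypothetical helper, not part of the platform.

```python
def filter_stream(messages, table=None):
    """Yield only messages for `table`; with no filter, yield everything."""
    for name, rows in messages:
        if table is None or name == table:
            yield name, rows


# Hypothetical stream carrying messages for two tables.
stream = [
    ("trade", [{"sym": "A", "price": 1.0}]),
    ("quote", [{"sym": "A", "bid": 0.9}]),
    ("trade", [{"sym": "B", "price": 2.0}]),
]

trades_only = list(filter_stream(stream, table="trade"))
everything = list(filter_stream(stream))
print(len(trades_only), len(everything))
```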

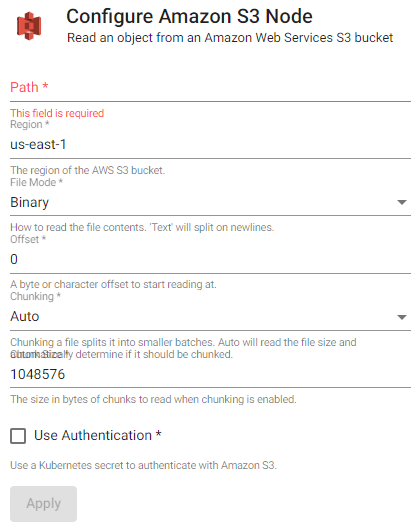

Amazon S3

Read data stored in Amazon Simple Storage Service (S3).

| item | description |
|---|---|
| Path | The source data path to read from S3, e.g. s3://bucket/file.csv |
| Region | The region of the AWS S3 bucket. |
| File Mode | The file read mode. In binary mode, content is read as a byte vector. In text mode, content is read as strings split on newlines, so each string represents a single line. |
| Offset | The byte or character offset at which to start reading. The default is 0. |
| Chunking | Splits the file into smaller batches. Auto reads the file size and determines whether the file should be chunked. |
| Chunk Size | The size of each chunk when chunking is enabled. |
| Use Authentication | Enable Kubernetes secret authentication. |
| Kubernetes Secret | The name of a Kubernetes secret that contains valid AWS credentials for authenticating with Amazon S3. |

Amazon S3 Authentication

To access private buckets or files, a Kubernetes secret needs to be created that contains valid AWS credentials. This secret needs to be created in the same namespace as the KX Insights Platform install. The name of that secret is then used in the Kubernetes Secret field when configuring the reader.

To create a Kubernetes secret containing AWS credentials:

    kubectl create secret generic --from-file=credentials=<path/to/.aws/credentials> <secret-name>

Where <path/to/.aws/credentials> is the path to an AWS credentials file, and <secret-name> is the name of the Kubernetes secret to create.

Note that this only needs to be done once, and each subsequent usage of the Amazon S3 reader can re-use the same Kubernetes secret.

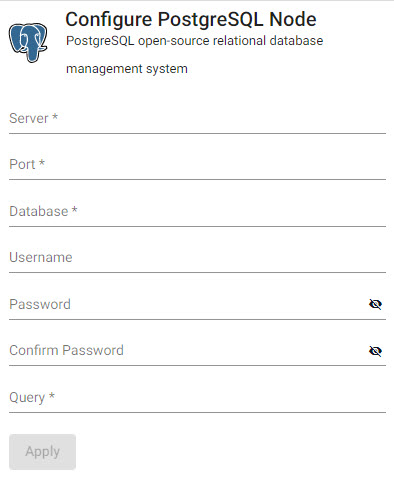

PostgreSQL and SQL Server

Reads data from a PostgreSQL (open-source relational) or Microsoft SQL Server database.

| item | description |
|---|---|
| Server | Address of the database to connect to; for example: postgresql.db.svc |
| Port | The connection port. |
| Database | The database name. |
| Username | The user with access to the database. |
| Password | The user's password for the database. |
| Confirmed Password | The password entered again; required for confirmation. |
| Query | The SQL query to run; for example: select * from datasourcename |
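
The shape of data returned by the example query can be previewed with any SQL engine. The sketch below uses Python's built-in `sqlite3` purely as a stand-in for PostgreSQL or SQL Server; the table contents and column names are made up for illustration, and only the query text comes from the table above.

```python
import sqlite3

# In-memory SQLite database standing in for the real server (assumption).
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE datasourcename (sym TEXT, price REAL)")
conn.executemany(
    "INSERT INTO datasourcename VALUES (?, ?)",
    [("AAPL", 150.0), ("MSFT", 300.0)],
)

# The same query text that would be entered in the Query field.
rows = conn.execute("select * from datasourcename").fetchall()
conn.close()

print(rows)  # a list of row tuples, one per record
```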