Development in the Scratchpad using q

This section guides you through the execution of q code and APIs using the Scratchpad.

The q environment in the Scratchpad offers a unique, self-contained location, where you can assign variables and produce analyses that are visible only to you.

The following sections describe how to:

- Execute code using q in the Scratchpad

- Develop Stream Processor pipelines using the Stream Processor q API

- Debug UI pipelines

- Interact with custom code using Packages

- Develop machine learning workflows using the Machine Learning Core APIs

- Include Python in your q code using embedPy and the PyKX q API

For information on data visualization and console output in the Scratchpad see here.

If you are primarily developing in Python see here.

Execute q code

When executing code within the Scratchpad, keep the following points in mind:

- The Q language is highlighted within the Scratchpad to indicate you are using the q language version of the Scratchpad.

- Use Ctrl + Enter or Cmd + Enter, depending on OS, to execute the current line or selection. You can execute the current line without selecting it.

- Click Run Scratchpad to execute everything in the editor.

Namespaces (or contexts)

The current namespace can be changed by adding a line such as \d .myNamespace anywhere above the line you're executing.

This line does not need to be included in the selection you're executing.

As an example, running just the second line in the following code assigns 1 2 3 to .test.result.

The result variable on the last line is in the global context, and is undefined.

\d .test

result: 1 2 3

// The namespace remains ".test" on all subsequent lines until it is changed by another `\d` command

.test.result ~ 1 2 3

\d .

// This variable is in the global context, and is undefined

result

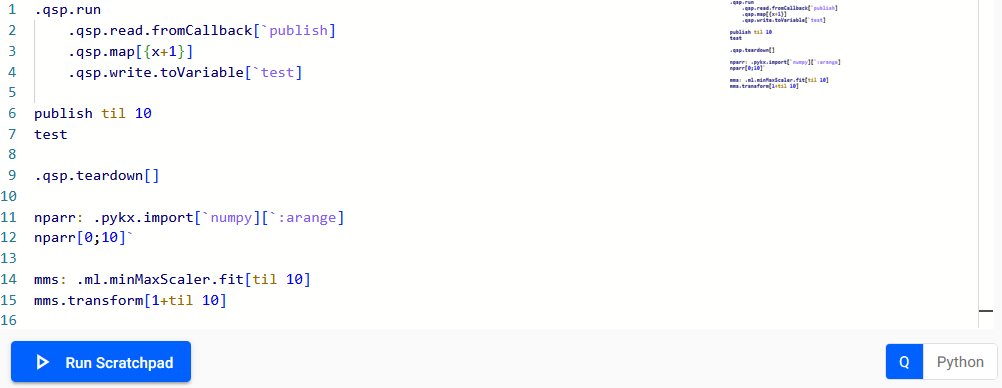

Develop Stream Processor pipelines

The Scratchpad can be used as a prototyping environment for Stream Processor pipelines. It provides a process in which you can easily publish batches to a pipeline, capture intermediate results, and step through your functions. Access to the pipeline API, provided through the Stream Processor q API, lets you simulate production workflows and test code logic before moving development work to production environments.

Pipelines run in the Scratchpad are not listed under Pipelines on the Overview page and must be managed from within the Scratchpad. They run in the Scratchpad process, just as they do when deployed using Quick Test.

For example, the following script creates a pipeline for enriching weather data. It contains an error that can easily be debugged in the Scratchpad.

- Copy the following code to your Scratchpad to create the pipeline.

// Only one pipeline can be run at once, so `teardown` is called before running a new pipeline.
// Because the scratchpad process hosting the pipeline is already running, there is no deployment step needed, just a call to .qsp.run
.qsp.teardown[];
.qsp.run
    // While developing a pipeline, the fromCallback reader lets you send batches one at a time.
    // This creates a function called `publish` to accept incoming batches.
    .qsp.read.fromCallback[`publish]
    .qsp.decode.csv[([] time:`timestamp$(); temp:`float$(); humidity:`float$())]
    .qsp.map[{[op; md; data]
        data: update dewpoint: temp - .2 * (100 - humidity) from data;
        data: update heatIndex: .5 * temp + 61 + (1.2 * temp - 68) + humidity * .094 from data;
        state: .qsp.get[op; md];
        state[`recordHigh]: max data[`heatIndex] , state`recordHigh;
        state[`recordLow]: min data[`heatIndex] , state`recordLow;
        .qsp.set[op; md; state];
        : update recordHigh: state`recordHigh, recordLow: state`recordLow from data
        };
        .qsp.use ``state!(::; `recordHigh`recordLow!(::; ::))]
    // The toVariable writer appends each batch to a variable in the current (scratchpad) process,
    // making it very convenient for debugging
    .qsp.write.toVariable[`out]

// Send a batch to the pipeline
publish "time,temp,humidity\n",
    "2024.07.01T12:00,81,74\n",
    "2024.07.01T12:00,82,70\n"

- This example throws the error "type - error in operator: map". To debug it, cache the parameters in the map node, then step through the code.

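- First, update the map node to write the parameters to a global variable, then re-run the pipeline definition and the call to publish.

.qsp.map[{[op; md; data]
    // Write the parameters to a global variable so they can be inspected from the Scratchpad
    .test.cache: (op; md; data);
    data: update dewpoint: temp - .2 * (100 - humidity) from data;
    data: update heatIndex: .5 * temp + 61 + (1.2 * temp - 68) + humidity * .094 from data;
    // The remaining lines of the function body are unchanged
    };
    .qsp.use ``state!(::; `recordHigh`recordLow!(::; ::))]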

- Next, run the following code to define the parameters while stepping through the map node.

`op`md`data set' .test.cache

- Run each line of the function individually until you get the error on this line.

state[`recordHigh]: max data[`heatIndex] , state`recordHigh;

- Because recordHigh is a generic null, it can't be passed to max. Rewrite the initial state so the records start as negative and positive float infinity.

    };
    .qsp.use ``state!(::; `recordHigh`recordLow!(-0w; 0w))]

- You can now redefine the pipeline, pass it multiple batches, and inspect the output.

publish "time,temp,humidity\n",
    "2024.07.01T12:00,81,74\n",
    "2024.07.01T13:00,82,70\n"
publish "2024.07.02T12:00,87,73\n",
    "2024.07.02T13:00,90,74\n"
out

time                          temp humidity dewpoint heatIndex recordHigh recordLow
-----------------------------------------------------------------------------------
2024.07.01D12:00:00.000000000 81   74       75.8     82.278    83.19      82.278
2024.07.01D13:00:00.000000000 82   70       76       83.19     83.19      82.278
2024.07.02D12:00:00.000000000 87   73       81.6     88.831    92.178     82.278
2024.07.02D13:00:00.000000000 90   74       84.8     92.178    92.178     82.278
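The type error in the walkthrough above can be reproduced outside a pipeline. The following is a minimal sketch, assuming only core q; the variable names heatIndex and failed are illustrative and not part of the pipeline. It shows that joining a float list with the generic null produces a mixed list that max rejects, while seeding with float infinities keeps the list simple.

```q
// Heat index values taken from the first batch in the walkthrough
heatIndex: 82.278 83.19

// Joining with the generic null (::) gives a mixed list that max cannot reduce.
// The trap returns the error text instead of halting execution
failed: @[max; heatIndex , (::); {x}]

// Seeding with float infinities keeps the joined list a simple float list
recordHigh: max heatIndex , -0w
recordLow: min heatIndex , 0w
```

Because -0w is smaller and 0w is larger than any other float, the first batch always replaces the seeded values.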

Debug UI pipelines

Pipelines written in the UI and run using Quick Test are evaluated in the Scratchpad process. Therefore, any global variables cached in a UI pipeline are available in the Scratchpad, and any global variables defined in the Scratchpad are available in the pipeline.

Because the hotkeys for evaluating code work in the pipeline editors, you can also step through UI pipeline functions in-place.
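As a rough illustration of this pattern, the sketch below caches each incoming batch in a global variable from inside a function of the kind you might place in a map node. The names enrich and .debug.lastBatch are hypothetical, and the direct call stands in for a batch arriving from a Quick Test run.

```q
// Hypothetical map-node function: caches the batch in a global, then returns the enriched table
enrich: {[data]
    .debug.lastBatch: data;
    update dewpoint: temp - .2 * (100 - humidity) from data
    };

// Stand-in for a batch delivered by the pipeline
enriched: enrich ([] temp: 81 82f; humidity: 74 70f);

// Inspect the cached batch from the Scratchpad
.debug.lastBatch
```

Assigning to a fully qualified name such as .debug.lastBatch inside a function sets a global, which is why the cached value remains visible after the function returns.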

Interact with custom code

By using Packages you can add custom code to kdb Insights Enterprise for use in the following situations:

- In the Stream Processor when adding custom streaming analytics.

- In the Database for adding custom queries.

The Scratchpad also has access to these APIs, allowing you to load custom code and access user-defined functions when developing Stream Processor pipelines or analytics for custom query APIs.

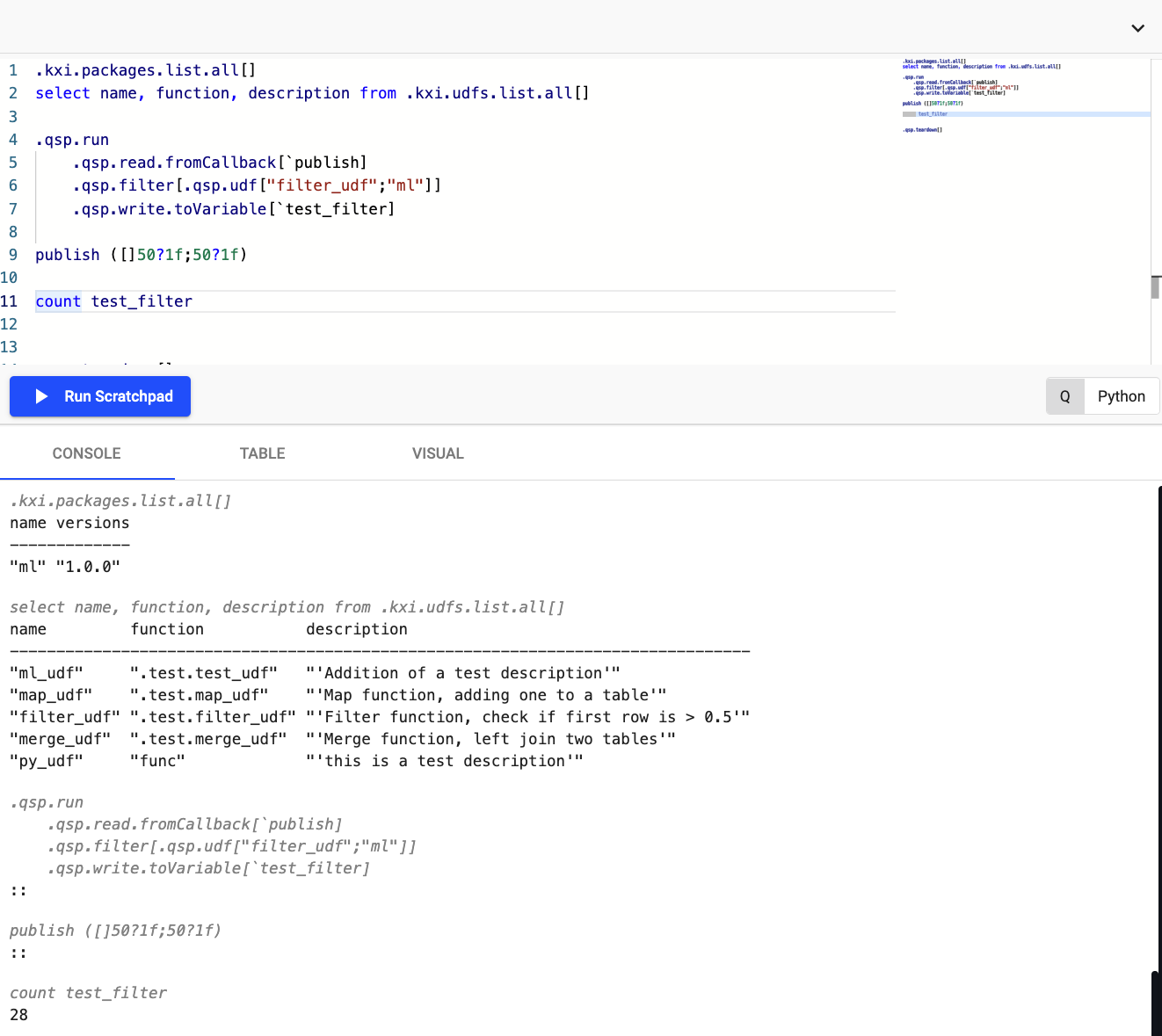

The following shows an example of a Scratchpad workflow which utilizes both the packages and a UDF API available within the Scratchpad.

This example shows a package named "ml", containing a function "filter_udf" that returns any rows where the first value is greater than 0.5. Passing the pipeline a table of 50 rows, containing random values between 0 and 1, it is expected that about half the rows pass through the filter node and are written to test_filter.
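The filtering logic described above can be sketched in plain q without loading a package. This is a local stand-in, not the packaged function: the body of filter_udf and the column names x and x1 are assumptions, with x taken to be the "first value".

```q
// Local stand-in for the packaged UDF (assumed logic): keep rows where the first column exceeds 0.5
filter_udf: {[data] select from data where x > 0.5}

// 50 rows of random values between 0 and 1
input: ([] x: 50?1f; x1: 50?1f)

// Apply the filter directly; in the pipeline the result would be written to test_filter
test_filter: filter_udf input
count test_filter
```

Because the values are drawn uniformly between 0 and 1, the row count varies from run to run but should be close to 25.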

Develop machine learning workflows

The Scratchpad has access to a variety of machine learning libraries created by KX. The Machine Learning Core APIs are included with all running Scratchpads. These APIs provide you with access to the following:

- Data preprocessing functionality and ML models/analytics designed specifically for streaming and time-series use-cases.

- ML Model Registry which provides a cloud storage location for ML models generated in q/Python.

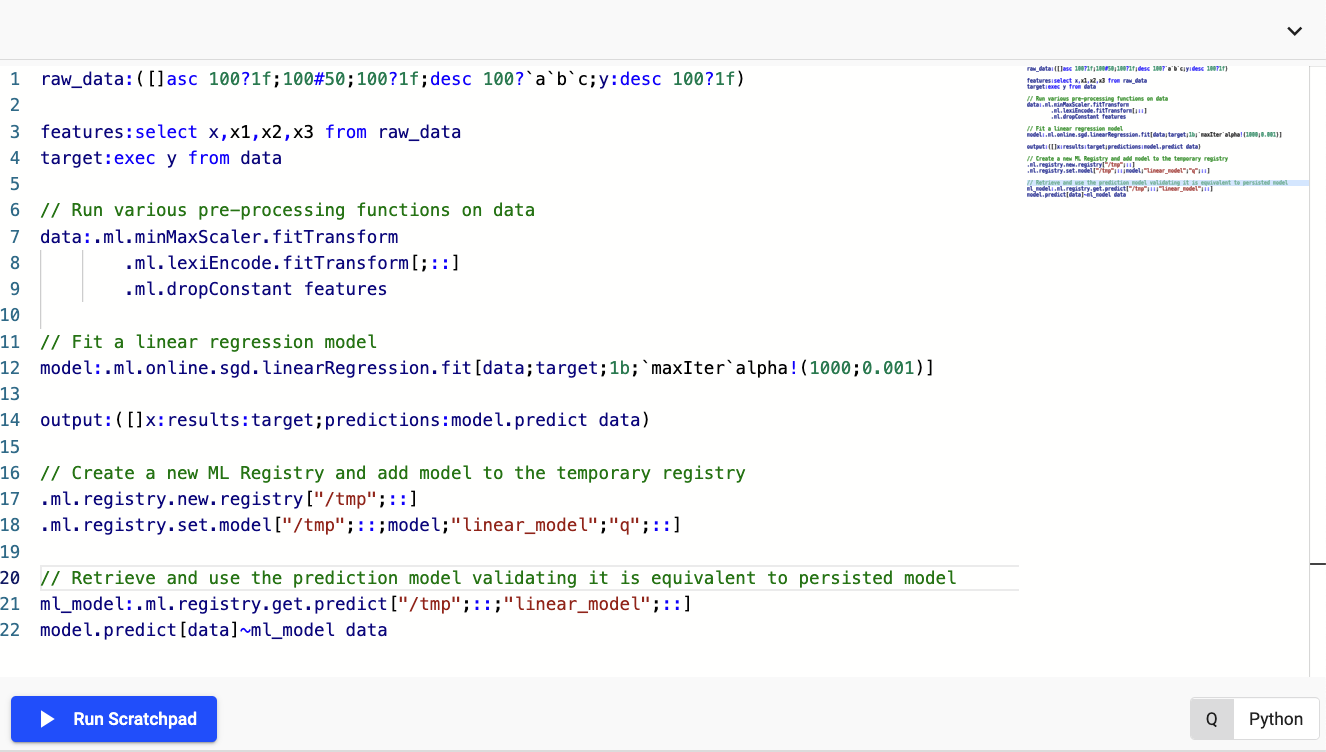

The example below shows how you can use this functionality to preprocess data, fit a machine learning model, and store it ephemerally within your Scratchpad session. Note that models stored in this manner are lost when the Scratchpad pod restarts.

Code snippet for ML Scratchpad example

Use the following code to replicate the behavior illustrated in the screenshot above:

raw_data:([]asc 100?1f;100#50;100?1f;desc 100?`a`b`c;y:desc 100?1f)

features:select x,x1,x2,x3 from raw_data

target:exec y from raw_data

// Run various pre-processing functions on data

data:.ml.minMaxScaler.fitTransform
  .ml.lexiEncode.fitTransform[;::]
  .ml.dropConstant features

// Fit a linear regression model

model:.ml.online.sgd.linearRegression.fit[data;target;1b;`maxIter`alpha!(1000;0.001)]

output:([]results:target;predictions:model.predict data)

// Create a new ML Registry and add model to the temporary registry

.ml.registry.new.registry["/tmp";::]

.ml.registry.set.model["/tmp";::;model;"linear_model";"q";::]

// Retrieve and use the prediction model validating it is equivalent to persisted model

ml_model:.ml.registry.get.predict["/tmp";::;"linear_model";::]

model.predict[data]~ml_model data

Include Python in your q code

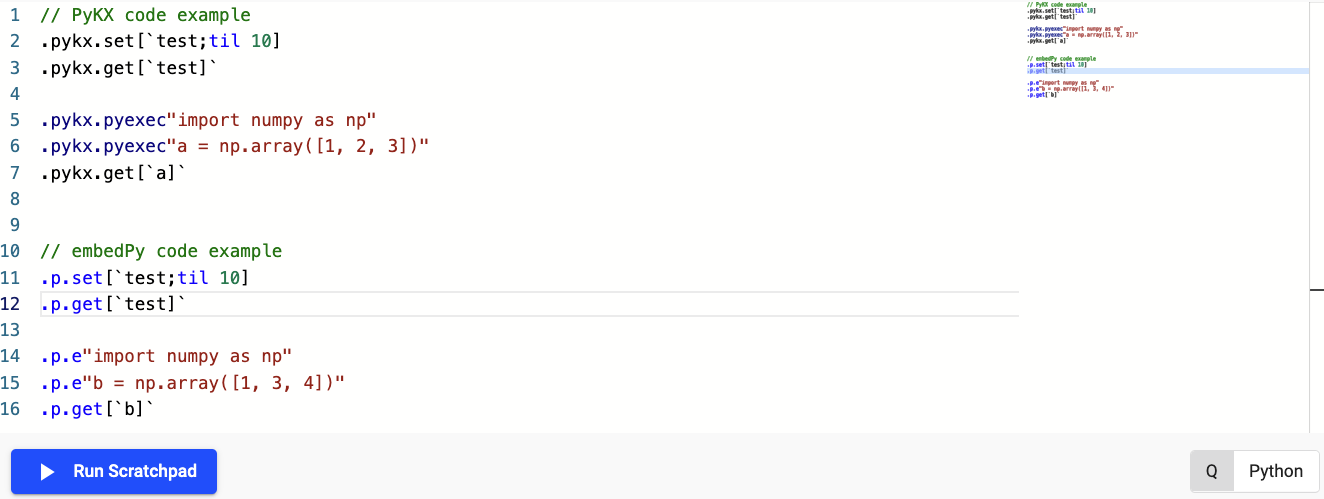

You can include Python functionality within your q code using embedPy or PyKX. Depending on your use case and familiarity with these APIs, you are free to use either API and to interchange them. However, it is strongly suggested that embedPy be reserved for integrating historical code and that any new development targets the equivalent PyKX API.

The following basic example shows usage of both the embedPy and PyKX q APIs to generate and use callable Python objects.

Code snippet for Python DSL use in Scratchpad

Use the following code to replicate the behavior illustrated in the screenshot above:

// PyKX code example

.pykx.set[`test;til 10]

.pykx.get[`test]`

.pykx.pyexec"import numpy as np"

.pykx.pyexec"a = np.array([1, 2, 3])"

.pykx.get[`a]`

// embedPy code example

.p.set[`test;til 10]

.p.get[`test]`

.p.e"import numpy as np"

.p.e"b = np.array([1, 3, 4])"

.p.get[`b]`

For more comprehensive Python development, refer to the Python development page here.

Known Issues

- STDOUT and STDERR are not shown in the console, so the result of using show, -1, or -2 is not shown.