KX for Databricks

This page briefly describes the use cases and capabilities of PyKX on Databricks.

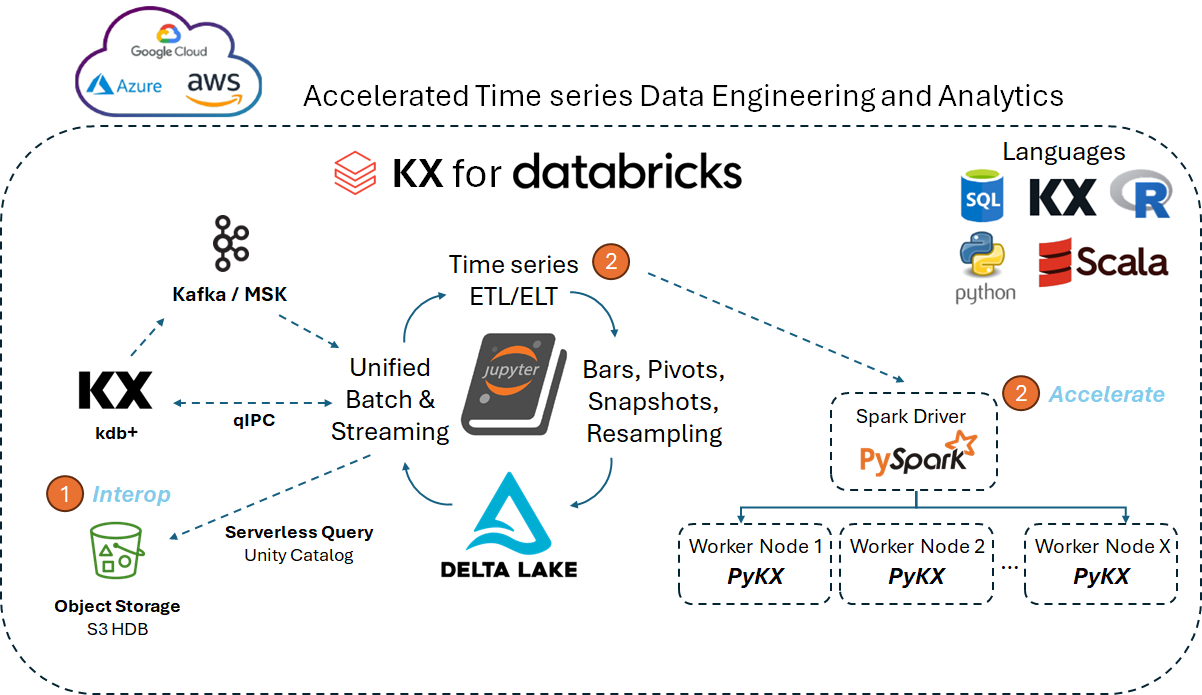

KX for Databricks brings the full capability of KX's time series engine to Databricks. PyKX, KX's Python-first interface already familiar to data scientists and quants, accelerates workloads and research for use cases such as:

- Algorithmic trading strategy development and backtesting

- Large-scale pre- and post-trade analytics

- Macro-economic research and analysis using large datasets

- Cross-asset analytics with deep historical data

KX for Databricks runs PyKX directly inside Databricks, for both Python and distributed Spark workloads. Use it on existing Delta Lake datasets without external KX dependencies, or interoperate with other KX products to draw on your broader KX estate.

Get started and workflows

Refer to the Get started page to:

- Install PyKX on Databricks.

- Check what type of license you need.

- Load data using Spark DataFrames.

- Choose between the Pythonic PyKX API and the q cell magic (%%q).

Next, learn how to set up a cluster, depending on the type of workflow you need:

- Work on a single node (the driver) to calculate OHLC, VWAP pricing, volatility, spread, and trade execution analysis (slippage).

- Work on a multi-node (distributed) cluster to calculate slippage with PySpark.
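
To make the quantities named in these workflows concrete, here is a minimal sketch of OHLC, VWAP, and slippage in plain Python. It does not use PyKX, q, or Spark, and is not the implementation from the workflow pages. The `Trade` class, the function names, the sample trades, and the choice of VWAP as the slippage benchmark are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Trade:
    """Hypothetical trade record: execution price and traded size."""
    price: float
    size: int


def ohlc(trades):
    """Return (open, high, low, close) for trades given in time order."""
    prices = [t.price for t in trades]
    return prices[0], max(prices), min(prices), prices[-1]


def vwap(trades):
    """Volume-weighted average price: sum(price * size) / sum(size)."""
    total_size = sum(t.size for t in trades)
    return sum(t.price * t.size for t in trades) / total_size


def slippage_bps(fill_price, benchmark_price, side):
    """Signed slippage in basis points; positive means a worse fill.

    side is +1 for a buy and -1 for a sell (an assumed convention).
    """
    return side * (fill_price - benchmark_price) / benchmark_price * 1e4


# Made-up trades for a single time bucket, already in time order.
trades = [
    Trade(100.0, 200),
    Trade(101.0, 100),
    Trade(99.5, 300),
    Trade(100.5, 400),
]

bar = ohlc(trades)
bucket_vwap = vwap(trades)
# Assumption: the bucket VWAP serves as the benchmark for a buy filled at 100.5.
buy_slippage = slippage_bps(fill_price=100.5, benchmark_price=bucket_vwap, side=1)
```

On a single node, calculations like these run over one in-memory table. On a distributed cluster, the same per-bucket logic would be applied to partitions of the data, for example one group of trades per symbol or per day.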