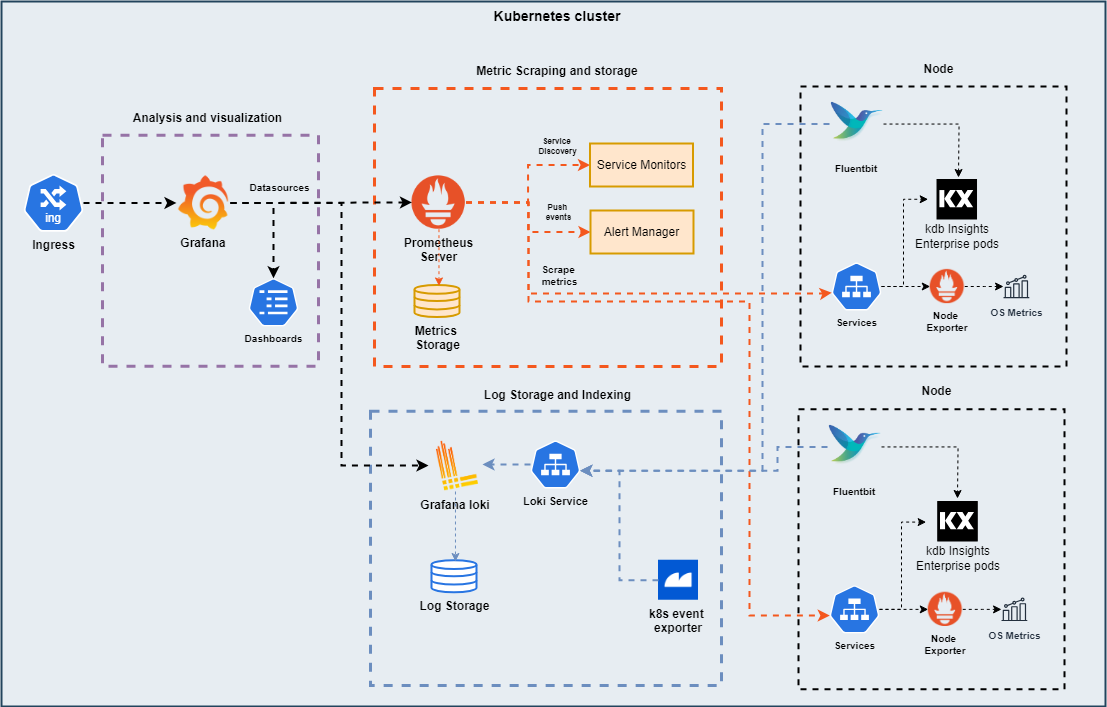

Example Monitoring Stack

This page guides you through deploying an example monitoring stack.

In this example, you will learn how to use:

- Grafana for analysis and visualization

- Prometheus for metric scraping and storage

- Grafana Loki for log storage

Prerequisites

Before you begin, ensure the following are in place:

- `kubectl` and `helm` are available on your system.

- You can access your Kubernetes cluster with `kubectl`.

Create a dedicated namespace for monitoring. This namespace contains all monitoring-related resources:

```bash
kubectl create namespace "$MONITORING_NAMESPACE"
```

Deploy Grafana and Prometheus

To deploy Grafana and Prometheus in this example, we use the kube-prometheus-stack Helm chart. It is an open-source umbrella chart that deploys Grafana, Prometheus, and related resources to a Kubernetes cluster. You can find the full list of components it deploys here.

Add and update the Helm repository with the following commands:

```bash
helm repo add prometheus-community https://prometheus-community.github.io/helm-charts
helm repo update prometheus-community
```

The following sections provide example values for version 65.1.1 of kube-prometheus-stack. For each relevant subchart, use the following variables:

| Variable | Description |
|---|---|
| `${CHART_VERSION}` | The chart version of kube-prometheus-stack. These values files were tested with 65.1.1. |
| `${GRAFANA_ADMIN_USER}` | The admin username for your Grafana instance. |
| `${GRAFANA_ADMIN_PASSWORD}` | The admin password for your Grafana instance. |
| `${INSIGHTS_URL}` | The URL of your Insights instance, without the HTTP(S) scheme. |
| `${INSIGHTS_CLUSTER_NAME}` | The name of your Kubernetes cluster. Metrics carry it as a label. |
| `${MONITORING_NAMESPACE}` | The monitoring namespace created previously. |

Grafana values

You can find the full guide on customizing the Grafana deployment here.

The values file below highlights settings that should be configured:

- Expose Grafana through an ingress on https://insights-host/grafana

- Set custom admin credentials defined in a Kubernetes secret

- Add Loki as a data source

- Search all namespaces for dashboards persisted as ConfigMaps

First, create a Kubernetes secret, for example by applying the following manifest with `kubectl apply -f`:

```yaml
apiVersion: v1
kind: Secret
metadata:
  name: grafana-admin-credentials
  namespace: ${MONITORING_NAMESPACE}
stringData:
  admin-user: ${GRAFANA_ADMIN_USER}
  admin-password: ${GRAFANA_ADMIN_PASSWORD}
```

The Grafana values file references this secret:

```yaml
grafana:
  admin:
    existingSecret: grafana-admin-credentials # References the secret created previously
    passwordKey: admin-password
    userKey: admin-user
  datasources: # Adds Loki as a datasource
    datasources.yaml:
      apiVersion: 1
      datasources:
        - jsonData:
            httpHeaderName1: X-Scope-OrgID # Loki tenant header
          name: Loki
          secureJsonData:
            httpHeaderValue1: "1"
          type: loki
          uid: loki
          url: http://loki-gateway.${MONITORING_NAMESPACE}.svc.cluster.local:80
          access: proxy
  defaultDashboardsEnabled: true
  grafana.ini:
    server:
      domain: ${INSIGHTS_URL}
      serve_from_sub_path: true
      root_url: https://${INSIGHTS_URL}/grafana
  ingress:
    enabled: true
    ingressClassName: nginx
    annotations:
      nginx.ingress.kubernetes.io/proxy-read-timeout: "900"
    hosts:
      - ${INSIGHTS_URL}
    path: /grafana
    pathType: ImplementationSpecific
  persistence:
    enabled: true
  sidecar:
    dashboards:
      searchNamespace: ALL
      provider:
        foldersFromFilesStructure: true
```
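With `sidecar.dashboards.searchNamespace: ALL`, the Grafana sidecar loads dashboards from ConfigMaps in any namespace. As a minimal sketch, such a dashboard ConfigMap might look like the following. The ConfigMap name, namespace, and dashboard JSON are hypothetical, and the `grafana_dashboard: "1"` label is an assumption based on the kube-prometheus-stack default for `grafana.sidecar.dashboards.label`; check that value for your chart version.

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: example-dashboard        # hypothetical name
  namespace: my-app              # hypothetical; any namespace works because searchNamespace is ALL
  labels:
    grafana_dashboard: "1"       # label the sidecar watches (assumed chart default, verify)
data:
  # Each key is written as a dashboard file that Grafana provisions
  example-dashboard.json: |
    {
      "title": "Example dashboard",
      "panels": [],
      "schemaVersion": 39
    }
```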

Prometheus values

You can find the values to customize the Prometheus server and operator here, under the `prometheus:` section.

The values file below highlights the following settings that should be configured:

- Enrich metrics with the name of the cluster

- Set retention for metrics

- Select all ServiceMonitors for service discovery

```yaml
prometheus:
  prometheusSpec:
    externalLabels:
      cluster: ${INSIGHTS_CLUSTER_NAME}
    retention: 4w
    retentionSize: 45GB
    serviceMonitorSelector: {}
    serviceMonitorSelectorNilUsesHelmValues: false
```
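The Prometheus values above set a retention size but no persistent volume. Without a `storageSpec`, the Prometheus Operator stores data in an `emptyDir` volume, which is lost when the pod is rescheduled. If metrics should survive restarts, a sketch like the following requests a PersistentVolumeClaim. The 50Gi size (chosen to leave headroom above `retentionSize: 45GB`) and the reliance on the cluster's default StorageClass are assumptions.

```yaml
prometheus:
  prometheusSpec:
    storageSpec:
      volumeClaimTemplate:
        spec:
          # No storageClassName is set, so the cluster's default StorageClass is used (assumption)
          accessModes: ["ReadWriteOnce"]
          resources:
            requests:
              storage: 50Gi   # headroom above retentionSize: 45GB
```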

Deploy

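To deploy kube-prometheus-stack, combine the Grafana and Prometheus values above into a single `values.yaml` file and execute the command below. Replace the `${...}` placeholders with your own values first; Helm does not expand environment variables inside values files.

```bash
helm install \
  kx-prom prometheus-community/kube-prometheus-stack \
  -n "$MONITORING_NAMESPACE" \
  -f values.yaml \
  --version "$CHART_VERSION"
```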

Once the deployment finishes, go to https://insights-host/grafana and log in with your admin credentials. At this point, Grafana and Prometheus are deployed to your cluster, but Prometheus may not find any services to scrape. If this happens, check whether the ServiceMonitors for kdb Insights Enterprise are enabled.

Service monitors

Prometheus uses a pull-based model for collecting metrics from applications and services. This means the applications and services must expose an HTTP(S) endpoint serving Prometheus-formatted metrics. Prometheus then periodically scrapes these endpoints, as defined in its configuration.

The Prometheus Operator includes a Custom Resource Definition (CRD) for the ServiceMonitor resource. A ServiceMonitor defines an application within Kubernetes that you want to scrape metrics from. The Operator watches the ServiceMonitors you define and automatically builds the required Prometheus configuration.

Within a ServiceMonitor, you specify the Kubernetes labels that the Operator uses to identify the Kubernetes Service, which in turn identifies the Pods that you want to monitor.
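As a minimal sketch of the pieces described above, the following ServiceMonitor selects Services labeled `app.kubernetes.io/name: my-app` in the `my-app` namespace and scrapes their port named `metrics`. All names, labels, and the port name are hypothetical; replace them with the labels and port of the Service you want to monitor.

```yaml
apiVersion: monitoring.coreos.com/v1
kind: ServiceMonitor
metadata:
  name: my-app                # hypothetical name
  namespace: my-app           # hypothetical namespace
spec:
  # Label selector the Operator uses to find the target Service(s)
  selector:
    matchLabels:
      app.kubernetes.io/name: my-app   # hypothetical label on the Service
  # Only look for matching Services in this namespace
  namespaceSelector:
    matchNames:
      - my-app
  endpoints:
    - port: metrics           # name of the Service port exposing metrics (hypothetical)
      path: /metrics          # HTTP path Prometheus scrapes
      interval: 30s           # how often Prometheus scrapes this endpoint
```

With the Prometheus values shown earlier (`serviceMonitorSelector: {}` and `serviceMonitorSelectorNilUsesHelmValues: false`), Prometheus should select this ServiceMonitor without needing an extra `release` label.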

Find out more about ServiceMonitors in kdb Insights Enterprise here.

Rook Ceph

If your deployment uses rook-ceph, you can verify whether ServiceMonitors for Rook are enabled by inspecting the release values:

```bash
helm get values rook-ceph -n rook-ceph
```

In the output, `monitoring.enabled` should be set to `true`.

You can find out how to enable monitoring for rook-ceph here.
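For reference, `monitoring.enabled` is a top-level key in the rook-ceph chart values, so an enabled configuration contains a fragment like the following. This is a sketch only; follow the linked instructions for your Rook version when changing it.

```yaml
# rook-ceph Helm chart values (fragment)
monitoring:
  enabled: true   # turns on Rook's Prometheus integration; requires the Prometheus Operator CRDs
```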

Deploy Loki

Loki is a horizontally scalable, highly available, multi-tenant log aggregation system inspired by Prometheus. It is designed to be very cost effective and easy to operate. It does not index the contents of the logs, but rather a set of labels for each log stream.

Loki is a complex system with many configuration options. Read more about Loki here to make the best decision for your requirements.

Loki can be installed using the Helm chart found here.

Depending on your cluster, you first need to decide on a log storage backend. Options are documented here.

Logs from kdb Insights Enterprise carry many labels per series, so increase the maximum number of label names per series in the Helm values during installation:

```yaml
loki:
  structuredConfig:
    limits_config:
      max_label_names_per_series: 40
```

Deploy Fluent Bit

Insights works with many logging stacks through Fluent Bit. Fluent Bit is a fast, lightweight, and highly scalable logging and metrics processor and forwarder that you can configure to forward logs to Loki. Go here for more information.

The following steps deploy a simple Fluent Bit configuration using the variables below. More configuration information is available here:

| Variable | Description |
|---|---|
| `${CHART_VERSION}` | The chart version of Fluent Bit. This values file was tested with 3.1.9. |
| `${MONITORING_NAMESPACE}` | The monitoring namespace created previously. |

Add the following Helm repository:

```bash
helm repo add fluent https://fluent.github.io/helm-charts
helm repo update fluent
```

Fluent Bit runs as a DaemonSet on the cluster. To ensure that it is scheduled on every node, create a priority class:

```yaml
apiVersion: scheduling.k8s.io/v1
kind: PriorityClass
metadata:
  name: fluentbit-priority
value: 1000
globalDefault: false
description: "Priority class for Fluent Bit DaemonSet"
```

An example Fluent Bit (version 3.1.9) values file looks like this:

```yaml
config:
  customParsers: |
    [PARSER]
        Name        cri
        Format      regex
        Regex       ^(?<time>[^ ]+) (?<stream>stdout|stderr) (?<logtag>[^ ]*) (?<message>.*)$
        Time_Key    time
        Time_Format %Y-%m-%dT%H:%M:%S.%L%z
  service: |
    [SERVICE]
        Flush         1
        Daemon        Off
        Log_Level     info
        Parsers_File  /fluent-bit/etc/conf/custom_parsers.conf
        HTTP_Server   On
        HTTP_Listen   0.0.0.0
        HTTP_PORT     2020
        Health_Check  On
  inputs: |
    [INPUT]
        Name            tail
        Path            /var/log/containers/*.log
        Parser          cri
        Tag             kube.*
        Mem_Buf_Limit   256MB
        Skip_Long_Lines On
  filters: |
    [FILTER]
        Name                kubernetes
        Match               kube.*
        Merge_Log           On
        Keep_Log            Off
        K8S-Logging.Exclude On
        K8S-Logging.Parser  On
  outputs: |
    [OUTPUT]
        name                   loki
        match                  kube.*
        host                   loki-gateway.${MONITORING_NAMESPACE}.svc.cluster.local
        port                   80
        labels                 job=fluentbit,namespace=$kubernetes['namespace_name'],pod=$kubernetes['pod_name'],container=$kubernetes['container_name'],host=$kubernetes['host']
        auto_kubernetes_labels on
        Retry_Limit            no_retries
serviceMonitor:
  enabled: true
  labels:
    release: kx-prom
resources:
  limits:
    cpu: 500m
    memory: 1Gi
  requests:
    cpu: 500m
    memory: 768Mi
tolerations:
  - effect: NoSchedule
    operator: Exists
priorityClassName: fluentbit-priority
```

Execute the following to deploy it to the monitoring namespace:

```bash
helm upgrade --install fluent-bit fluent/fluent-bit \
  -n "$MONITORING_NAMESPACE" \
  -f values.yaml \
  --version "$CHART_VERSION"
```

Deploy Kubernetes event exporter

Kubernetes event exporter exports often-missed Kubernetes events to various outputs so that they can be used for observability or alerting. You can configure it to send Kubernetes events to Loki.

The following variables are needed:

| Variable | Description |
|---|---|
| `${CHART_VERSION}` | The chart version of kubernetes-event-exporter. This values file was tested with 3.2.14. |
| `${MONITORING_NAMESPACE}` | The monitoring namespace created previously. |

To deploy Kubernetes event exporter, first add the following Helm repository:

```bash
helm repo add bitnami https://charts.bitnami.com/bitnami
helm repo update bitnami
```

Set a receiver in `values.yaml` to forward events to Loki:

```yaml
fullnameOverride: "kubernetes-event-exporter"
serviceAccount:
  create: true
  name: event-exporter
config:
  logLevel: debug
  logFormat: json
  receivers:
    - name: "loki"
      loki:
        # Loki push API endpoint, served through the Loki gateway
        url: http://loki-gateway.${MONITORING_NAMESPACE}.svc.cluster.local/loki/api/v1/push
```
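Kubernetes event exporter only delivers an event to a receiver when a route matches it. Because Helm replaces lists rather than merging them, the `receivers` list above replaces the chart's default receivers; if the chart's default route still points at one of those (an assumption to check against the default values of your chart version), events will not reach Loki. A sketch of a route, added under the same `config:` key, that sends every event to the `loki` receiver:

```yaml
config:
  route:
    routes:
      # A match rule with no filters matches every event; send them all to "loki"
      - match:
          - receiver: "loki"
```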

Execute the following to deploy it to the monitoring namespace:

```bash
helm install event-exporter bitnami/kubernetes-event-exporter \
  -n "$MONITORING_NAMESPACE" \
  -f values.yaml \
  --version "$CHART_VERSION"
```

Azure Marketplace

Azure Marketplace deployments come with their own monitoring stack, relying on Azure Monitor and Azure Log Analytics. If you prefer the example stack above, disable the Azure monitoring stack by executing:

```bash
az aks disable-addons -a monitoring -n "$CLUSTER_NAME" -g "$RESOURCE_GROUP"
```

Shipped dashboards

The kube-prometheus-stack chart deploys many useful dashboards by itself to monitor the cluster's state.

In addition, kdb Insights Enterprise ships with a few predefined dashboards. In Grafana, the list of dashboard folders includes a folder named after the namespace into which kdb Insights Enterprise is deployed.

kdb Insights Enterprise provides a set of pre-configured alerts to help you monitor and maintain the health of kdb Insights Enterprise.