Import

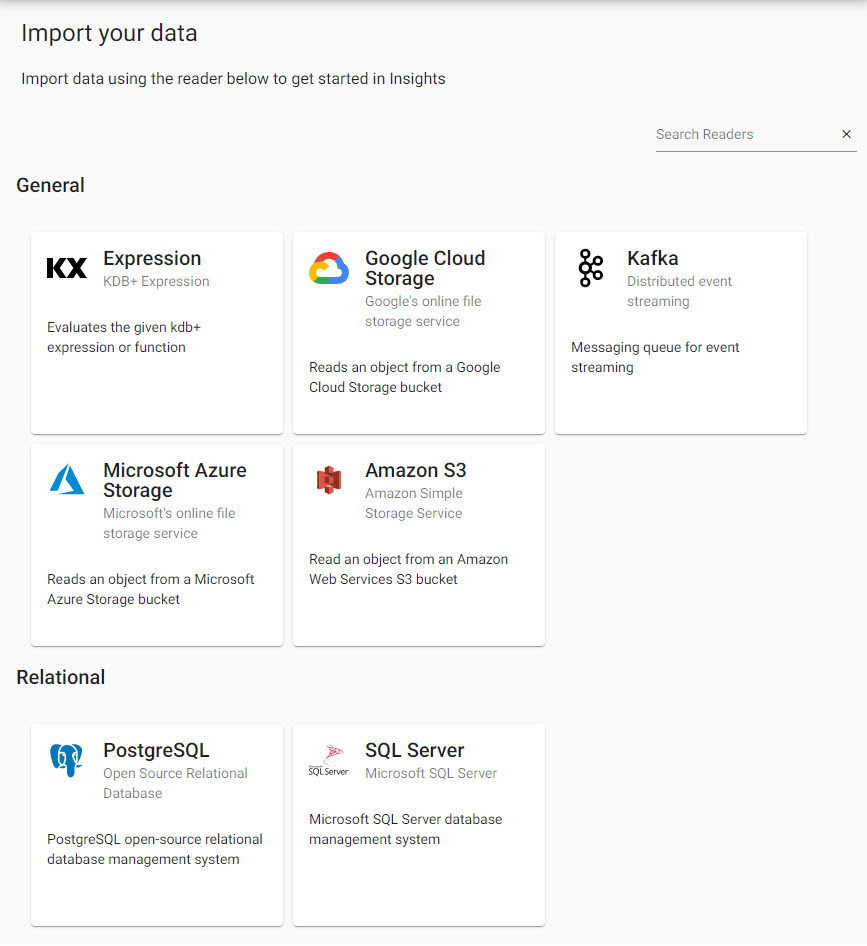

Import data from cloud and relational data services.

Set up

-

Select a data service.

-

Complete the workflow, then

Open Pipeline. -

Changes can be made in pipeline

Editmode before deploying. Pipelines can also be saved. -

Open an Explore tab to query the data.

Data Service

In the following examples, broker or server fields should include the appropriate Kubernetes namespace substring as required. Further information can be found here.

The Sample handle is the gui-data release service name and port, where insights is the release name in this context. This is the parameter used after the helm install command in the installation instructions for installing Insights chart, where the parameter value is set to insights.

Kafka

Connect to an Apache Kafka distributed event streaming platform. The video below gives an example of a Kafka import.

-

Define the Reader node with the Kafka broker details, port information and topic name.

Sample Kafka Reader Node

- Broker: kafka:9092

- Topic: trades

- Offset: Start

-

Define a JSON decoder node.

* Decode Each: false -

Define the table name to upsert the data too.

The Broker field can be the Kafka broker pod name when the Kafka broker is installed in the same Kubernetes namespace as the KX Insights Platform. If the Kafka cluster has been installed into a different Kubernetes namespace, then the field expects the full service name of the Kafka broker - i.e. kafka.default.svc.cluster.local:9092, where default is the namespace that the Kafka cluster is in. The full-service name is set in the KX_KAFKA_BROKERS environment variable property in the Kafka Producer Kubernetes installation instructions.

JSON Decoder Requirement

The JSON decoder expects to receive a JSON string that defines an array of objects that contain key value pairs, for example:

<column name>: <value> pairs, eg. "[{ column1: 1, column2: 1 }, { column1: 2, column2: 2 }]"Kdb Expression

The video below gives an example of a kdb expression import.

-

Generate some data using kdb in the Reader node:

n:2000; ([] date:n?(reverse .z.d-1+til 10); instance:n?`inst1`inst2`inst3`inst4; sym:n?`USD`EUR`GBP`JPY; account:n?("aa";"bb";"cc";"dd"); cnt:n?10) -

Define the table name to upsert the data too.

SQL Databases

Applicable to all supported SQL databases, including PostgreSQL and SQL Server.

The video below gives an example for a PostgreSQL import.

-

Define the Reader node, including any required authentication alongside server and port details.

Sample Postgres Reader Node

- Server: postgresql

- Port: 5432

- Database: testdb

- Username: testdbuser

- Password: testdbuserpassword

Where Server is

postgresql.default.svc, thedefaultis the namespace, e.g.dbwill bepostgresql.db.svcThe Reader node also includes the SQL query:

select * from carscase sensitive

Node properties and queries are case sensitive and should be lower case.

-

Define the table name to upsert the data too.

Cloud Storage Services

Applicable to all Cloud Service Readers, including Google Cloud Storage, Amazon S3 and Microsoft Azure Storage.

The following example is for an Amazon S3 storage service.

-

Define the Reader node with the S3 path details, how the file will be read and optionally the Kubernetes secret for authentication.

Sample Amazon 3 Reader Node

- Path: s3://bucket/numbers.txt

- Mode: Text

-

Define a CSV decoder node.

* Delimiter: "," * Header: Always * Schema = SS* -

Define the table name to upsert the data too.

Note that a Kubernetes secret is required if authenticating with Amazon S3. See the section on Amazon S3 Authentication for more details.