Object Storage (Weather)

No kdb+ knowledge required

This example assumes no prior experience with q/kdb+. You can replace the URL provided with any other object storage URL to get similar results.

Object storage is a means of storage for unstructured data, eliminating the scaling limitations of traditional file storage. Limitless scale is the reason object storage is the storage of the cloud; Amazon, Google and Microsoft all employ object storage as their primary storage.

Free Trial offers a sample weather dataset hosted on each of the major cloud providers. Select one of the cloud providers and enter the relevant details for that provider into the first input form.

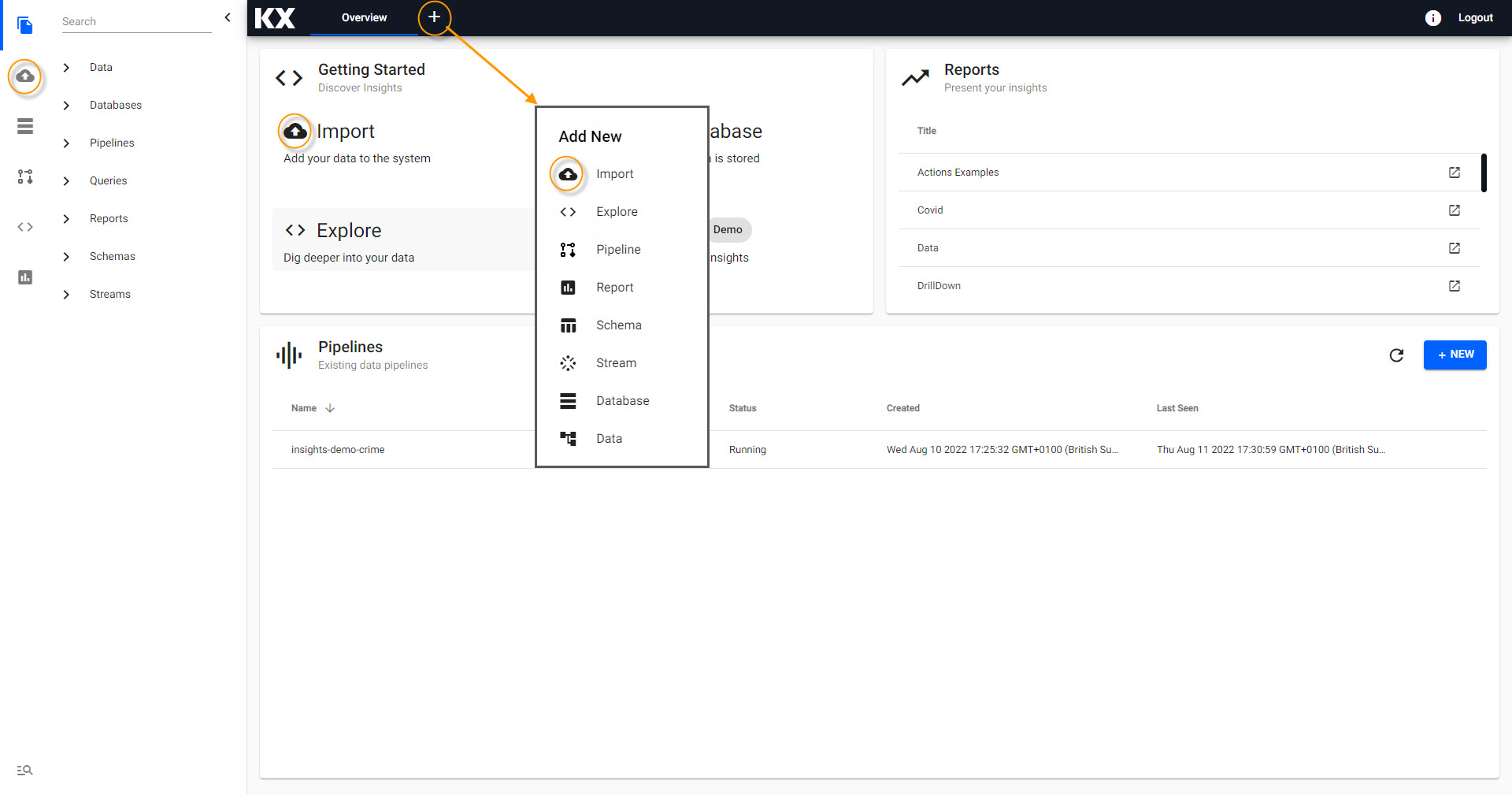

Creating the Pipeline

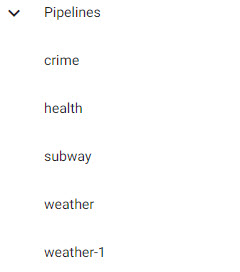

First select the import wizard. It can be accessed from the [+] menu of the Document bar, or from the left-hand icon menu or Overview page. All created pipelines, irrespective of source, are listed under Pipelines in the left-hand entity tree menu.

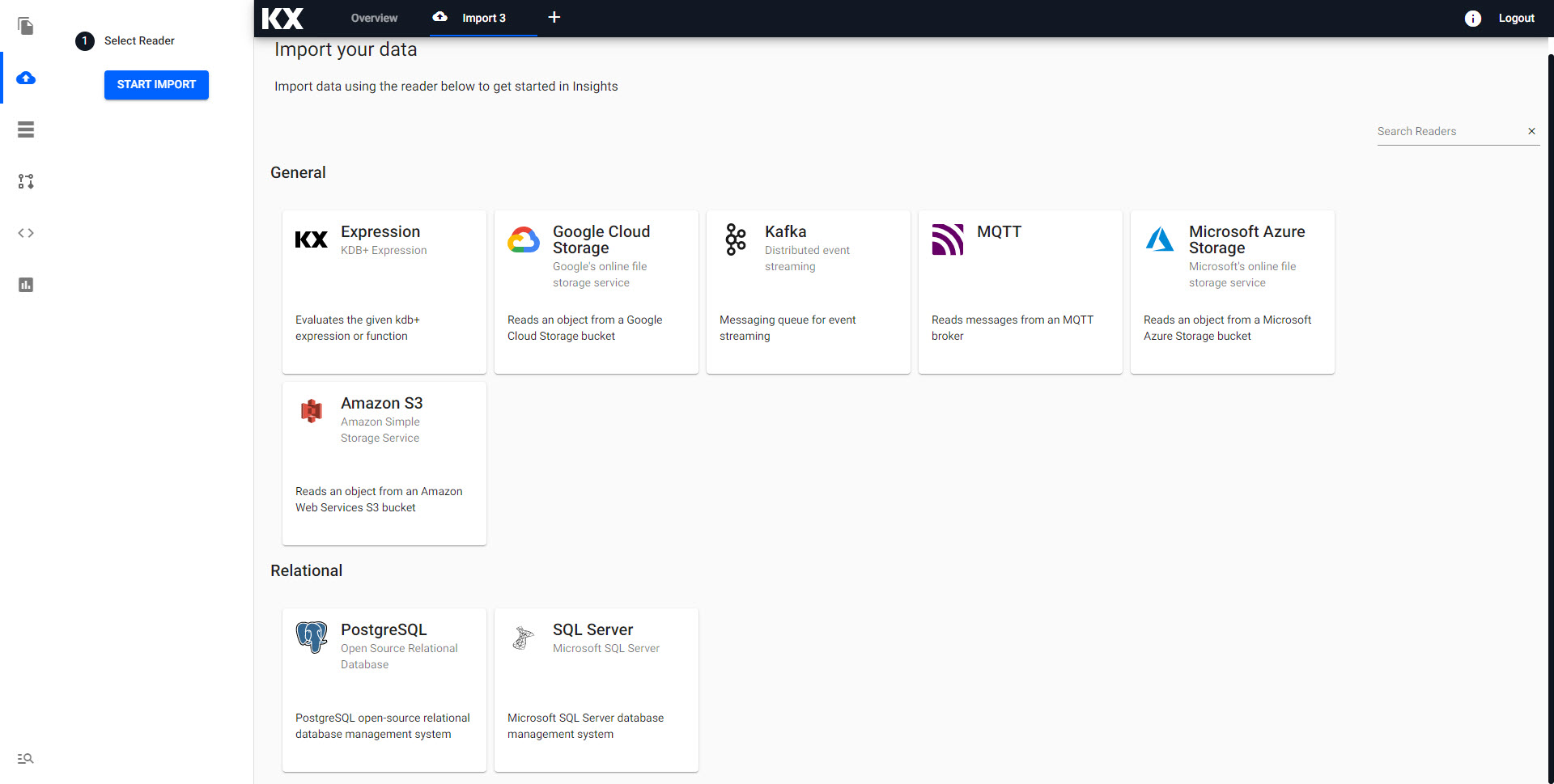

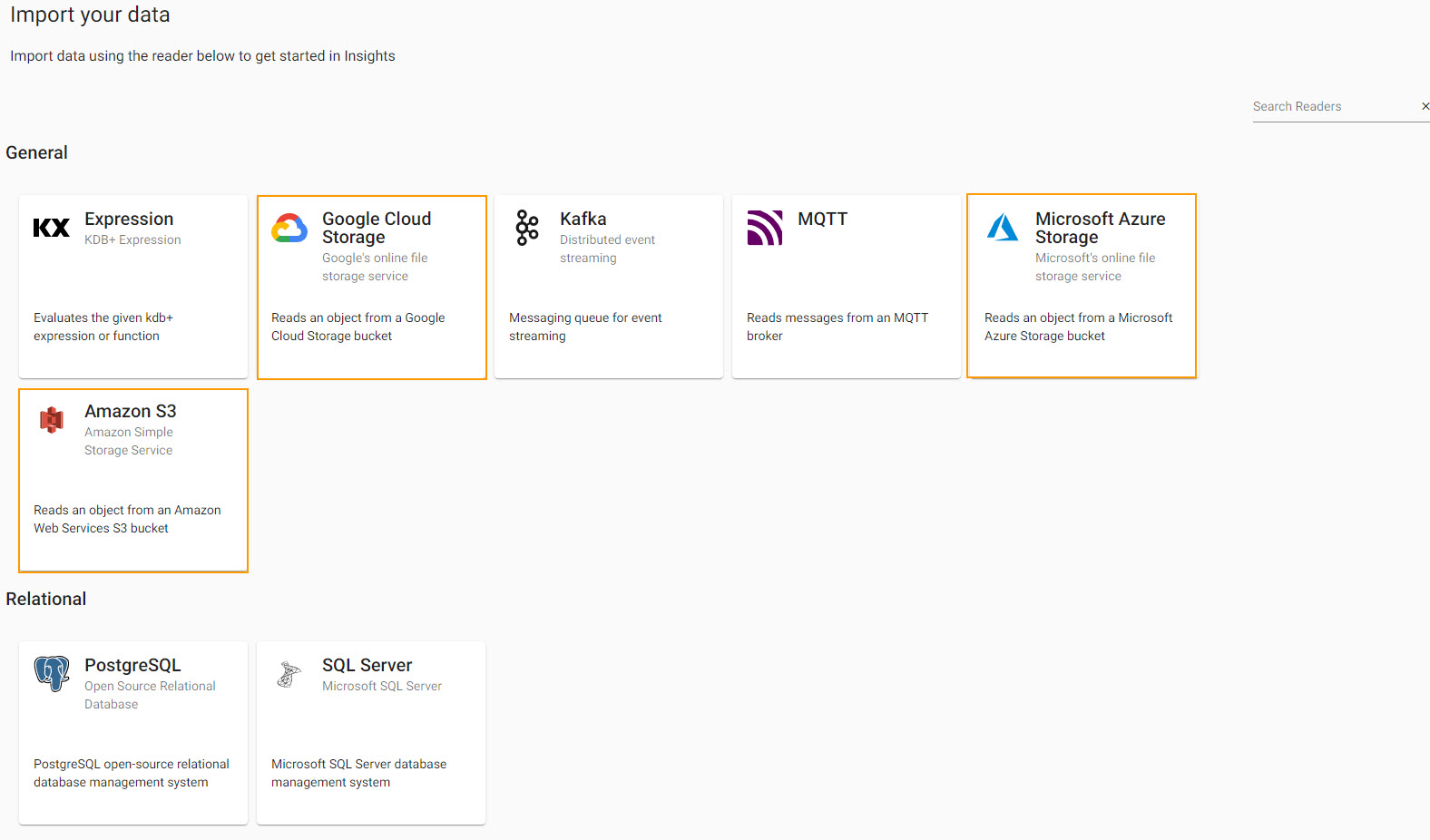

Select a Reader

Select one of the cloud providers from the import options.

Configure a Reader

Enter the relevant details for that provider into the first input form.

Google Cloud Storage:

| setting | value |
|---|---|
| Path* | gs://kxevg/weather/temp.csv |
| Project ID | kx-evangelism |
| File Mode* | Binary |
| Offset* | 0 |
| Chunking* | Auto |
| Chunk Size* | 1MB |
| Use Authentication | No |

Microsoft Azure Storage:

| setting | value |
|---|---|
| Path* | ms://kxevg/temp.csv |
| Account* | kxevg |
| File Mode* | Binary |
| Offset* | 0 |
| Chunking* | Auto |
| Chunk Size* | 1MB |
| Use Authentication | No |

Amazon S3:

| setting | value |
|---|---|
| Path* | s3://kxevangelism/temp.csv |
| Region* | eu-west-1 |
| File Mode* | Binary |
| Offset* | 0 |
| Chunking* | Auto |
| Chunk Size | 1MB |
| Use Authentication | No |
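
The reader paths above share one shape: a scheme that identifies the provider, a bucket or container name, and an object key. As a rough illustration only (this is not part of the KX Insights import wizard), the following Python sketch splits such paths with the standard library. The function name `split_object_url` and the provider labels in `SCHEMES` are assumptions made for this example.

```python
from urllib.parse import urlparse

# Provider labels are illustrative; the schemes come from the reader paths.
SCHEMES = {
    "gs": "Google Cloud Storage",
    "ms": "Microsoft Azure Storage",
    "s3": "Amazon S3",
}


def split_object_url(url):
    """Split an object storage URL into (provider, bucket, key)."""
    parts = urlparse(url)
    if parts.scheme not in SCHEMES:
        raise ValueError(f"unsupported scheme: {parts.scheme!r}")
    # netloc holds the bucket/container; path holds the object key.
    return SCHEMES[parts.scheme], parts.netloc, parts.path.lstrip("/")


if __name__ == "__main__":
    for url in (
        "gs://kxevg/weather/temp.csv",
        "ms://kxevg/temp.csv",
        "s3://kxevangelism/temp.csv",
    ):
        print(split_object_url(url))
```

Swapping in a different object storage URL only changes the bucket and key; the scheme still decides which provider-specific fields (Project ID, Account or Region) the reader form asks for.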

Click Next when done.

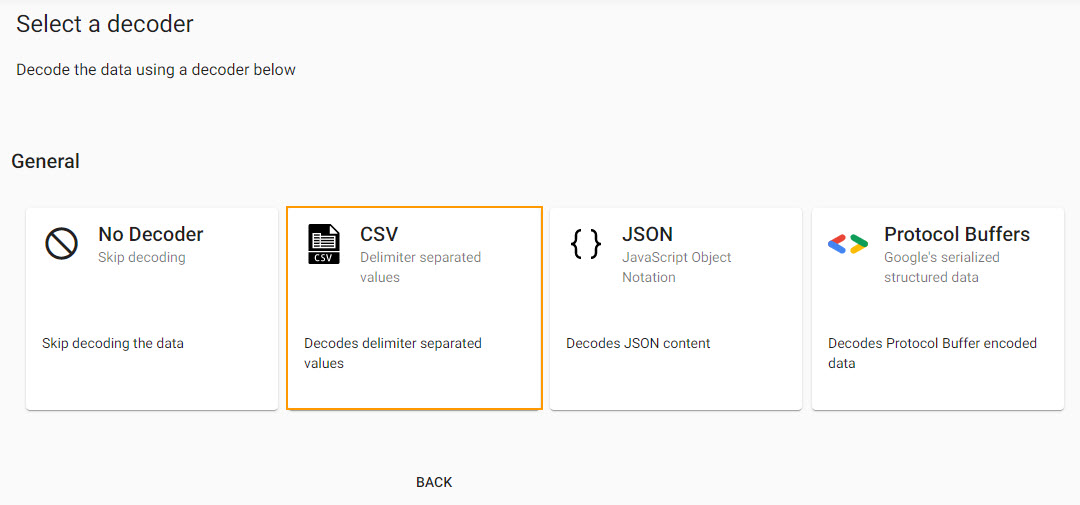

Select a Decoder

With the data source defined, the next step selects a decoder that matches the type of data being imported. The source data is a CSV file, so set the delimiter (typically a comma for a CSV file) and indicate whether the source data has a header row.

| setting | value |
|---|---|
| Delimiter | , |
| Header | First Row |
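
To make the two decoder settings concrete, here is a small Python sketch using the standard `csv` module. It is only an analogy for what a CSV decoder does, not the KX Insights implementation, and the sample rows and the `timestamp` column are made up for illustration.

```python
import csv
import io

# Hypothetical sample; the real file's columns may differ.
SAMPLE = "timestamp,airtemp\n2022-01-01T00:00:00,41.0\n2022-01-01T01:00:00,39.2\n"


def decode_csv(text, delimiter=",", header_first_row=True):
    """Decode delimited text into a list of dicts keyed by column name."""
    rows = list(csv.reader(io.StringIO(text), delimiter=delimiter))
    if not rows:
        return []
    if header_first_row:
        header, body = rows[0], rows[1:]
    else:
        # Without a header row, fall back to positional column names.
        header, body = [f"col{i}" for i in range(len(rows[0]))], rows
    return [dict(zip(header, row)) for row in body]


if __name__ == "__main__":
    for record in decode_csv(SAMPLE):
        print(record)  # every value is still a string at this stage
```

Note that the decoded values are all strings; converting them to typed columns is a separate step.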

Click Next when done.

Configure Schema

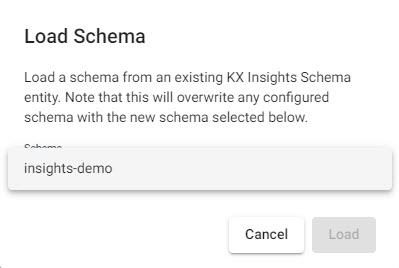

Imported data requires a schema compatible with the KX Insights Platform. The insights-demo database has a predefined set of schemas for each of the data sets available in Free Trial, referenced in the schema table. The configure schema step applies, to the data being imported, the kdb+ datatypes used by the destination database and required for exploring the data in Insights.

The next step applies a schema to the imported data.

| setting | value |
|---|---|
| Apply a Schema | Checked |
| Data Format | Table |

To attach the schema to the data set:

-

Leave the Data Format as the default value of

Any -

Click

-

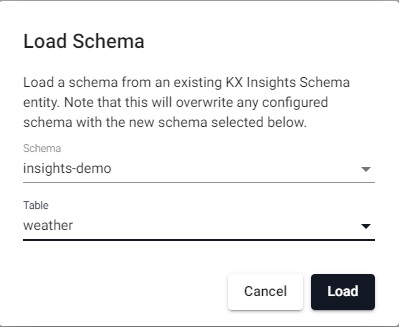

Select the

insights-demoSchema from the dropdown

-

Choose the Table

weather

-

Check Parse Strings for ALL columns. This is an essential step, and may cause issues during pipeline deployment if incorrectly set.

Parse Strings

This indicates whether parsing of input string data into other datatypes is required. When using a CSV Decoder, Parse Strings should be ticked for ALL columns.
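
Because a CSV decoder yields every field as text, Parse Strings is what turns those strings into the column types the schema expects. The sketch below imitates that idea in plain Python. The column names and target types in `SCHEMA` are hypothetical, not the actual definition of the weather table, and `parse_strings` is a name invented for this example.

```python
from datetime import datetime

# Hypothetical schema: column name -> function that parses a string.
SCHEMA = {
    "timestamp": datetime.fromisoformat,
    "airtemp": float,
}


def parse_strings(record, schema=SCHEMA):
    """Return a copy of record with each string parsed to its schema type."""
    parsed = {}
    for column, parser in schema.items():
        try:
            parsed[column] = parser(record[column])
        except (KeyError, ValueError) as exc:
            # Surface the offending column instead of failing silently.
            raise ValueError(f"cannot parse column {column!r}: {exc}") from exc
    return parsed


if __name__ == "__main__":
    raw = {"timestamp": "2022-01-01T00:00:00", "airtemp": "41.0"}
    print(parse_strings(raw))
```

If a column were left unparsed, the text "41.0" would be passed on where a number is expected, which is one way a type mismatch could surface later in the pipeline.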

Click Next when done.

Configure Writer

The final step in pipeline creation is to write the imported data to a table in the database. With Free Trial we will use the insights-demo database and assign the imported data to the weather table.

| setting | value |
|---|---|
| Database | insights-demo |
| Table | weather |
| Deduplicate Stream | Yes |

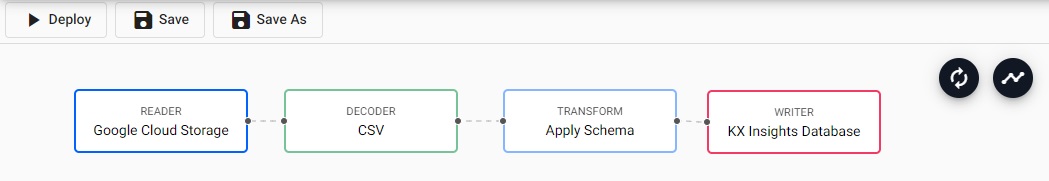

When done, complete the import; the pipeline then opens in the pipeline viewer.

Writer - KX Insights Database

The Writer - KX Insights Database node is essential for exploring data in a pipeline. This node defines which assembly and table to write the data to. As part of this, the assembly must also be deployed. An assembly or pipeline can be deployed individually, or a pipeline can be associated with an assembly, in which case all pipelines associated with that assembly are deployed when the assembly is deployed.

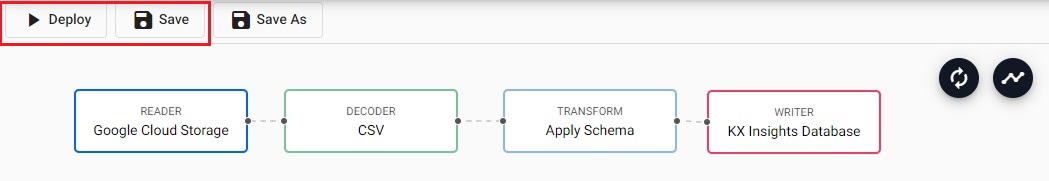

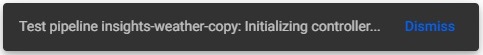

Pipeline view

The pipeline view allows you to review and edit your pipeline by selecting any of the nodes. However, this is not required as part of our Free Trial tutorial.

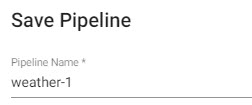

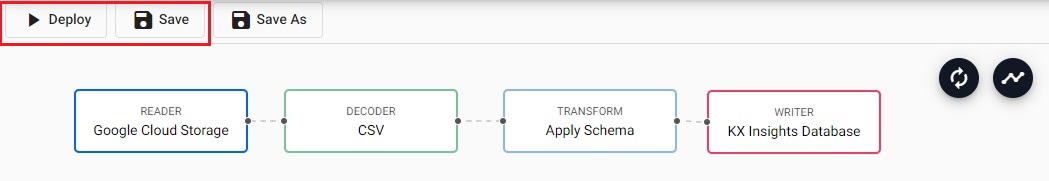

Click Save and give the pipeline a name. This name should be unique to the pipeline; for Free Trial, there is a pipeline already named weather, so give this new pipeline a name like weather-1.

The newly created pipeline will now feature in the list of pipelines in the left-hand entity tree.

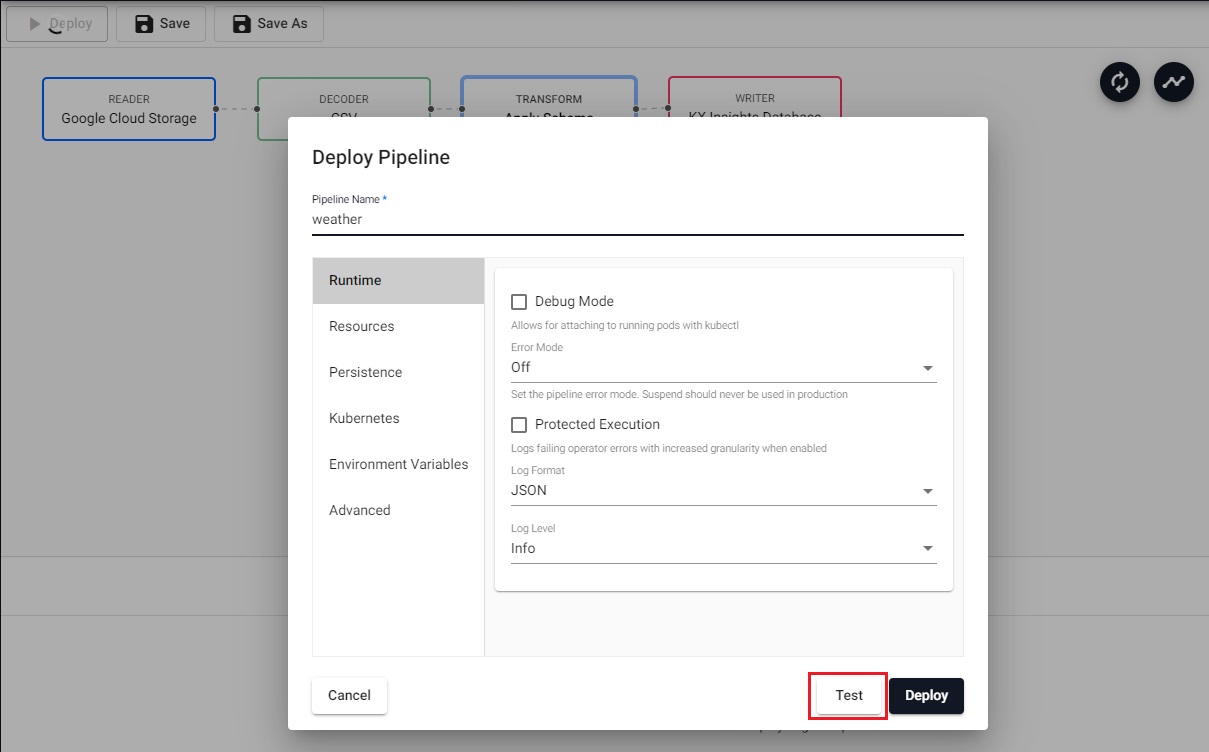

Testing the pipeline

The next step is to test deploy the pipeline created in the import stage above.

- Save your pipeline, giving it a name that is indicative of what you are trying to do.
- Select Deploy.
- Select Test on the Deploy screen.

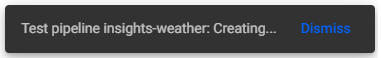

This will deploy your pipeline in a test mode, collect some of the data from the reader and run it through the steps prior to the writedown to the database.

Note

Any errors that occur in the writedown phase will not be picked up here and will only occur when the pipeline is deployed.
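
One way to picture test mode is as a run of every stage except the writer over a small sample. The following Python sketch is a conceptual model only: the stage functions, the `run_pipeline` helper, the sample size and the data are all invented for this illustration and do not correspond to KX Insights APIs.

```python
from itertools import islice


def read_lines():
    """Stand-in reader: yields raw CSV lines (made-up data)."""
    yield from ["airtemp", "41.0", "39.2", "50.0"]


def decode(lines):
    """Stand-in decoder: drops the header row."""
    return islice(lines, 1, None)


def apply_schema(values):
    """Stand-in schema step: parses each value to float."""
    return (float(v) for v in values)


def run_pipeline(writer, test=False, sample_size=2):
    """Run the stages; in test mode, sample the input and skip the writer."""
    lines = read_lines()
    if test:
        # Keep the header plus sample_size data rows.
        lines = islice(lines, sample_size + 1)
    rows = list(apply_schema(decode(lines)))
    if not test:
        writer(rows)
    return rows


def failing_writer(rows):
    """Stand-in writer that always fails, to mimic a writedown error."""
    raise RuntimeError("writedown failed")


if __name__ == "__main__":
    print(run_pipeline(failing_writer, test=True))  # writer is never called
    try:
        run_pipeline(failing_writer)
    except RuntimeError as exc:
        print("full deploy surfaced:", exc)
```

In this model the test run succeeds even though the writer is broken, which mirrors why a clean test does not guarantee a clean deployment.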

- It is recommended to stay on this tab until the test completes. Assuming you have defined the pipeline correctly, the following status popups will appear:
  - Creating Pipeline
  - Initializing Controller
  - Complete
- Once the Complete popup has been displayed, you can check the results; any erroring nodes will be highlighted. Clicking on a node will display a table in the bottom panel, populated with the output from the test.

Note

The panel is not populated for Writer nodes or nodes that do not output the data in a table format.

I want to learn more about Pipelines

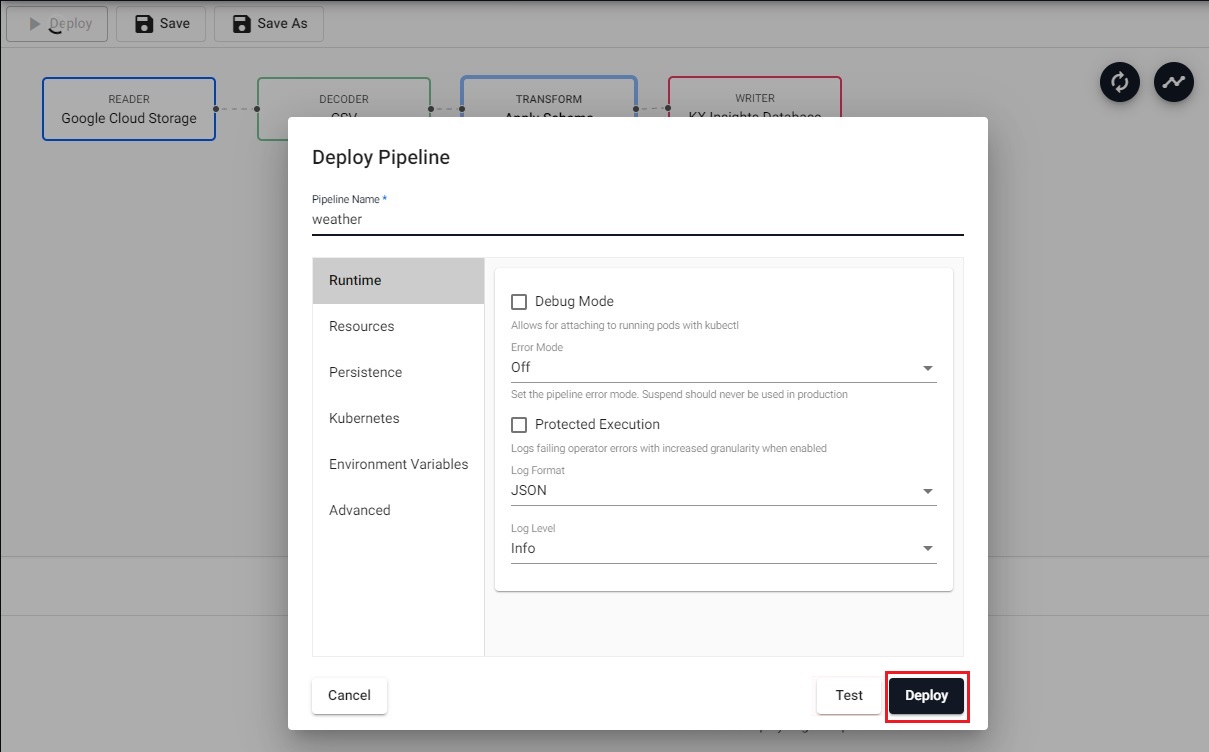

Deploying the pipeline

The next step is to deploy your pipeline.

-

If you did not follow the

Teststep, or made changes since you last saved, you need to Save your pipeline giving it a name that is indicative of what you are trying to do. -

Select Deploy.

-

Select Deploy again on the Deploy screen.

-

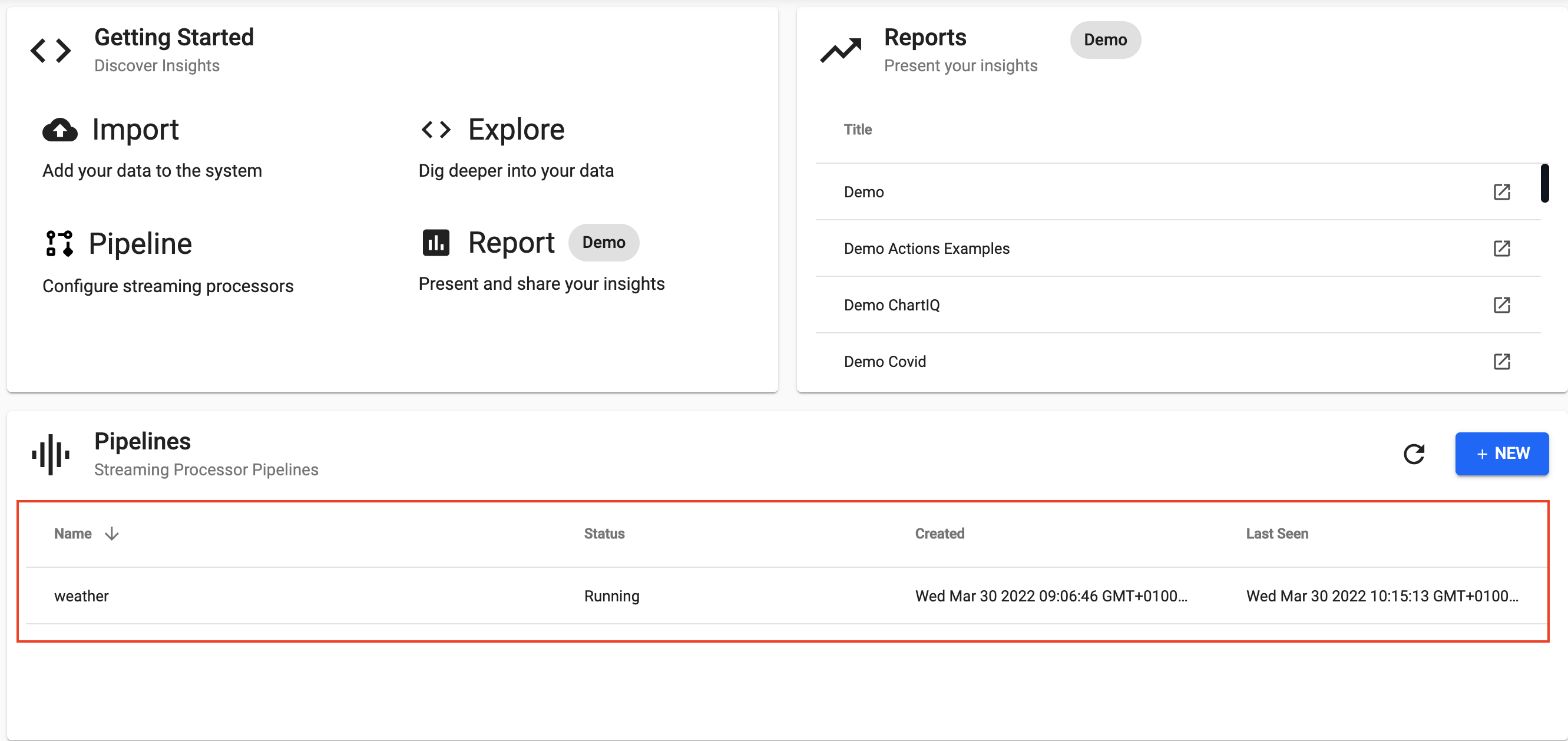

Once deployed you can check on the progress of your pipeline back in the Overview pane where you started.

-

When it reaches

Status=Runningthen it is done and your data is loaded.

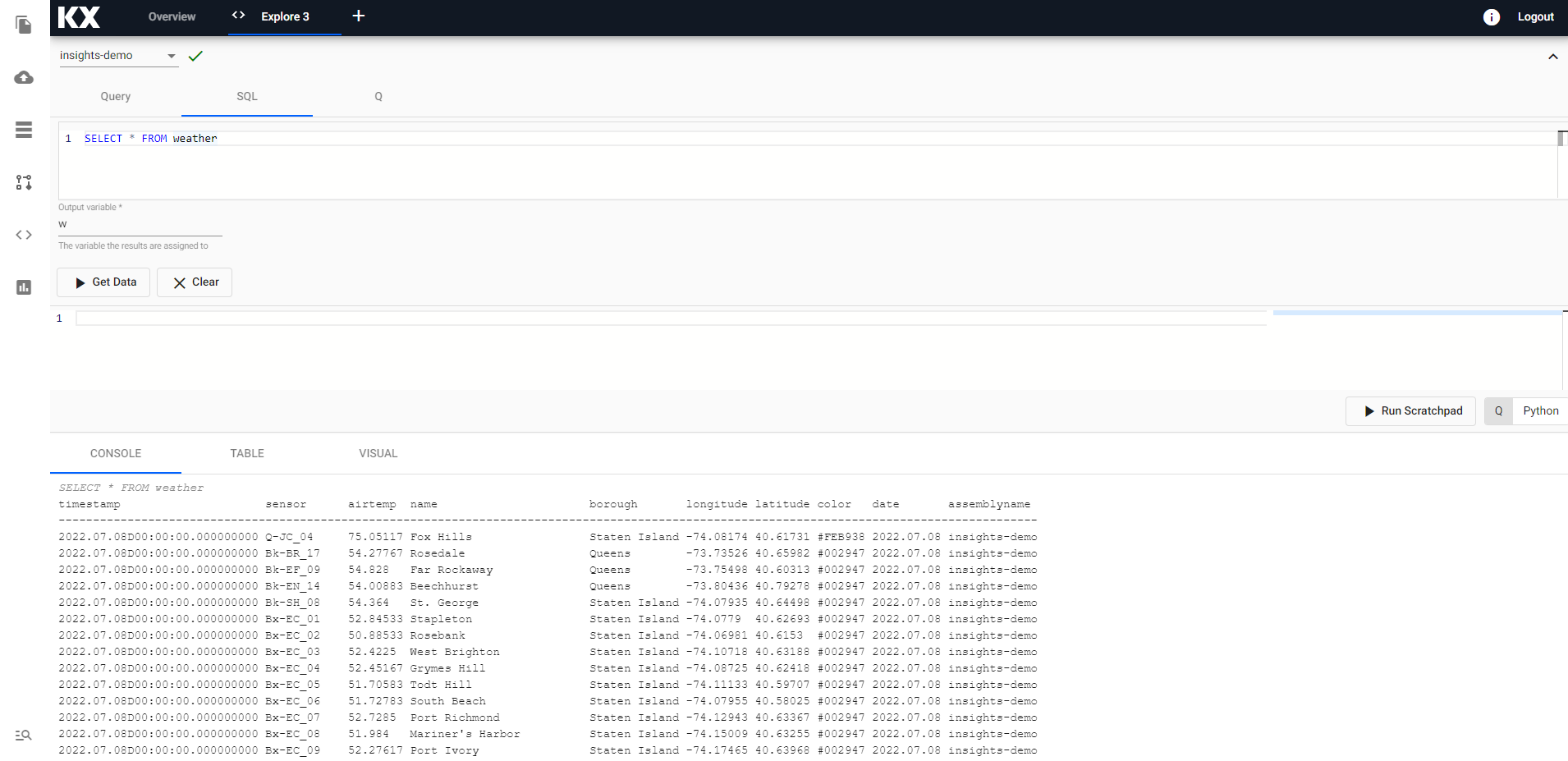

Exploring

Select Explore from the Overview panel to start the process of exploring your loaded data. See here for an overview of the Explore Window.

The Explore tab offers a few different options: Query, SQL and q. Let's look at SQL.

You can run the following SQL to retrieve all records from the weather table.

    SELECT * FROM weather

Note that you will need to define an Output Variable and then select Get Data to execute the query.

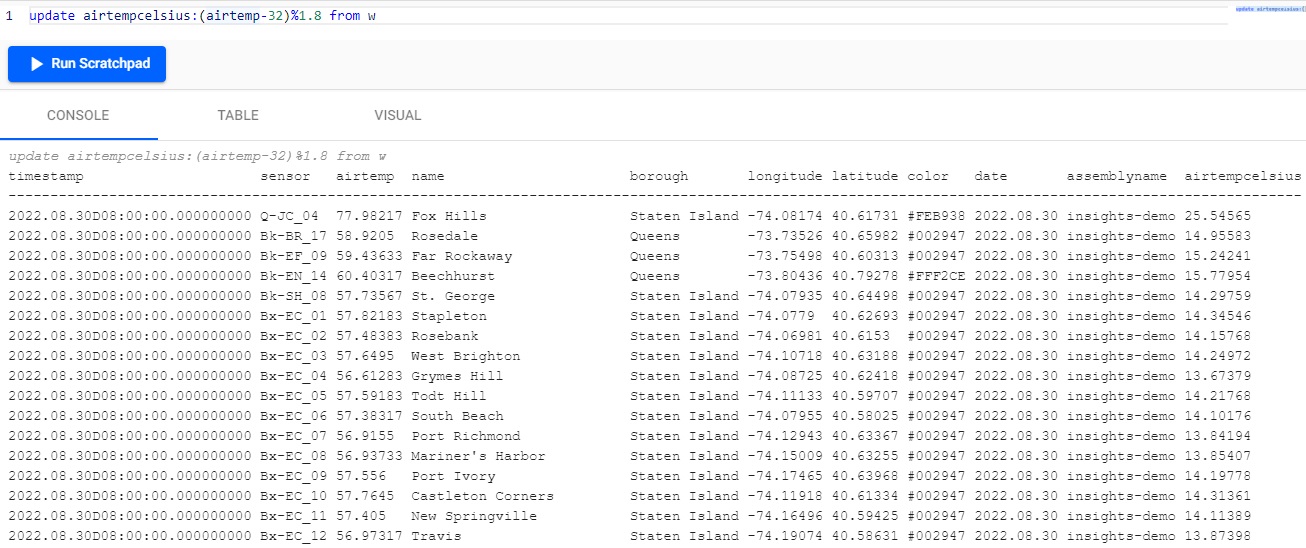

Now that we have output the weather table, we can see that the airtemp column is in Fahrenheit. To add a new column that shows the temperature in Celsius, we can run the following code.

update airtempcelsius:(airtemp-32)%1.8 from w

-

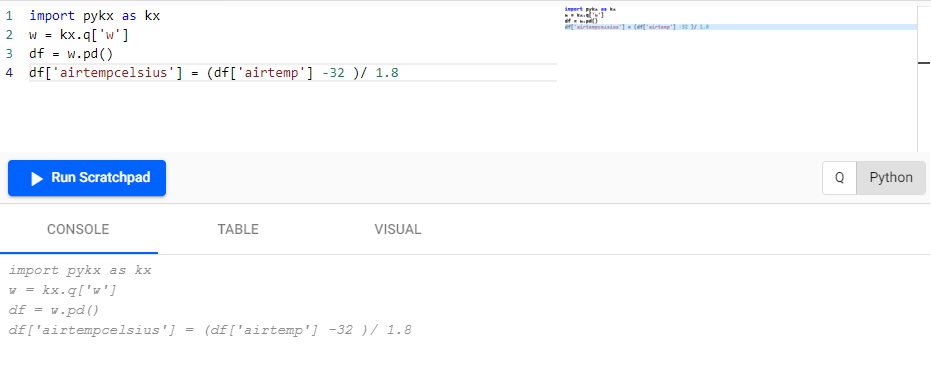

Add the airtempcelsius column:

import pykx as kx w = kx.q['w'] df = w.pd() df['airtempcelsius'] = (df['airtemp'] -32 )/ 1.8

-

Display the results:

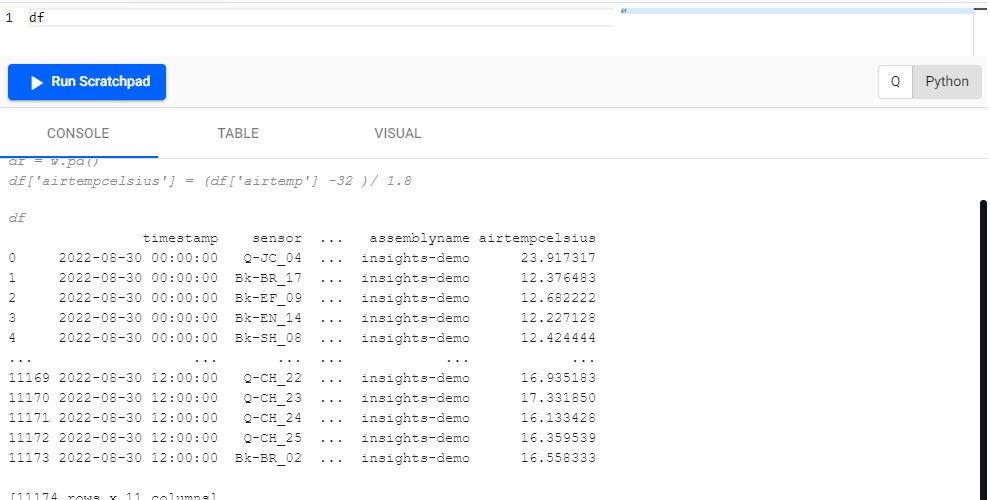

df
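
Both snippets apply the same formula, C = (F - 32) / 1.8. A standalone Python check of that arithmetic, independent of pykx and of the weather table, might look like the following. The function name `fahrenheit_to_celsius` is an assumption for this example.

```python
import math


def fahrenheit_to_celsius(temp_f):
    """Convert a Fahrenheit temperature to Celsius."""
    return (temp_f - 32) / 1.8


if __name__ == "__main__":
    # Freezing and boiling points of water as sanity checks.
    assert math.isclose(fahrenheit_to_celsius(32), 0.0, abs_tol=1e-9)
    assert math.isclose(fahrenheit_to_celsius(212), 100.0)
    print(fahrenheit_to_celsius(50))
```

The checks use `math.isclose` rather than `==` because 1.8 has no exact binary floating-point representation.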

Tip

Run Scratchpad executes the whole scratchpad. The current line or selection can be run with the keyboard shortcut of CTRL + Enter, or ⌘Enter on Mac.

We can see that a new column called airtempcelsius has been created.

Refresh Browser

If the tables are not appearing in the Explore tab as expected, refresh your browser and retry.

Troubleshooting

If the tables are still not outputting after refreshing your browser, try our Troubleshooting page.

Let's ingest some more data! Try next with the live Kafka Subway Data.