Performance of Intel Optane persistent memory

Key findings

Used as a block-storage device with KX Streaming Analytics, Intel® Optane™ persistent memory (PMem) delivers 4× to 12× better analytics performance than high-performance NVMe storage, and performance similar to DRAM for query workloads. For key data-processing workloads, DRAM requirements fell by 37%.

PMem lets organizations support more demanding analytic workloads on more data with less infrastructure.

Setup and evaluation

Hardware setup

We configured two systems:

| Configuration | Baseline without Optane | With Optane |
|---|---|---|
| Server and operating system | Supermicro 2029U-TN24R4T, CentOS 8 | Same as baseline |
| RAM | 768 GB RAM (2666 MHz) LRDIMMs | Same as baseline |
| CPU | 36 physical cores: 2 × Intel® Xeon® Gold 6240L Gen.2 2.6 GHz CPUs, hyper-threading turned on | Same as baseline |
| Optane persistent memory | n/a | 12 DIMMs × 512 GB NMA1XXD512GPS |
| Log | RAID 50 data volume, 24× NVMe P4510 | Intel Optane² persistent memory, 3 TB EXT4³ DAX |
| Intraday database (≤ 24 hours) | 48 TB RAIDIX ERA¹ software RAID, XFS, 6 RAID 5 groups, 64 chunk | Intel Optane persistent memory, 3 TB EXT4 DAX |
| Historical database (> 24 hours) | RAID 50 data volume | Same as baseline configuration |

Environment setup and testing approach

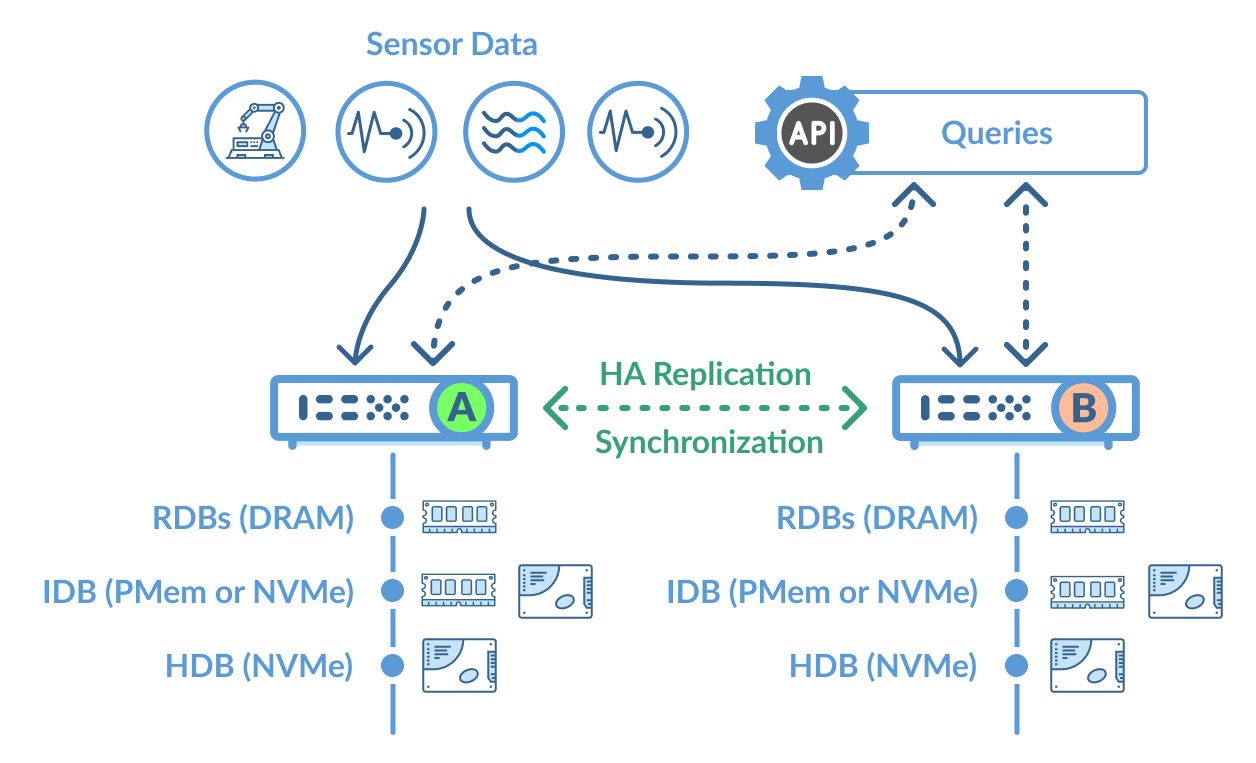

We configured a KX Streaming Analytics system operating in a high-availability (HA) cluster, processing and analyzing semiconductor manufacturing data as follows. We ran tests on both configurations for ingestion, processing, and analytics. Tests were run with the same data and durations.

Publishing and ingestion

- Publish and ingest over 2.25M sensor readings in 894 messages per second, 2.5 TB per day

- Ingest sensor trace, aggregation, event, and IoT data using four publishing clients from a semiconductor front-end processing environment
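As a rough sanity check on the ingestion figures above, the following sketch derives average per-message and per-second values. It assumes decimal units (1 TB = 10^12 bytes), a uniform load over 24 hours, and treats "over 2.25M" as exactly 2.25M readings per second. The function and key names are illustrative only.

```python
SECONDS_PER_DAY = 24 * 60 * 60


def ingestion_profile(readings_per_s, messages_per_s, bytes_per_day):
    """Derive average batch size and byte rates from the headline figures."""
    bytes_per_s = bytes_per_day / SECONDS_PER_DAY
    return {
        "readings_per_message": readings_per_s / messages_per_s,
        "mb_per_second": bytes_per_s / 1e6,
        "bytes_per_reading": bytes_per_s / readings_per_s,
    }


# Figures from the list above: 2.25M readings/s, 894 messages/s, 2.5 TB/day.
profile = ingestion_profile(2.25e6, 894, 2.5e12)
for name, value in profile.items():
    print(f"{name}: {value:,.1f}")
```

Under these assumptions each message carries roughly 2,500 readings, and the sustained ingest rate is about 29 MB/s, or about 13 bytes per reading on average.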

Analytics

- 81 queries per second spanning real-time data, intraday data (< 24 hours), and historical data

- 100 queries at a time targeting the real-time database (DRAM), intra-day database (on Intel Optane PMem), and historical database (on NVMe storage)

- Single-threaded calculation and aggregation tests targeted at the in-memory database and intra-day database
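The way a time-range query maps onto the three tiers can be sketched as follows. This is an illustration only, not how KX Streaming Analytics routes queries: the 24-hour intraday limit comes from the hardware table, while the one-hour real-time window, the reference date, and all function names are assumptions made for the example.

```python
from datetime import datetime, timedelta

# Tiers ordered from newest data to oldest.
TIERS = ["RDB (DRAM)", "IDB (PMem)", "HDB (NVMe)"]

REALTIME_WINDOW = timedelta(hours=1)   # hypothetical; not stated in this paper
INTRADAY_LIMIT = timedelta(hours=24)   # intraday database holds data up to 24 hours old


def tier_index(data_time, now):
    """Index into TIERS for data recorded at data_time."""
    age = now - data_time
    if age <= REALTIME_WINDOW:
        return 0
    if age <= INTRADAY_LIMIT:
        return 1
    return 2


def tiers_for_range(start, end, now):
    """All tiers a query over [start, end] would touch, newest first."""
    newest, oldest = tier_index(end, now), tier_index(start, now)
    return TIERS[newest:oldest + 1]


now = datetime(2021, 3, 1, 12, 0)  # arbitrary reference time for the demo
recent = tiers_for_range(now - timedelta(minutes=10), now, now)
spanning = tiers_for_range(now - timedelta(hours=30), now - timedelta(hours=2), now)
print(recent)
print(spanning)
```

A ten-minute query on current data touches only the in-memory tier, whereas a range reaching back 30 hours touches both the PMem-backed intraday database and the NVMe-backed historical database.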

Data processing

- Perform a data-intensive process, entailing reading and writing all of the data ingested for the day

High availability and replication

- System ran 24×7 with real-time replication to secondary node

- Logged all data ingested, to support data protection and recovery

- Data fed to two nodes, mediated to ensure no data loss in event of disruption to the primary system

Data model and ingestion

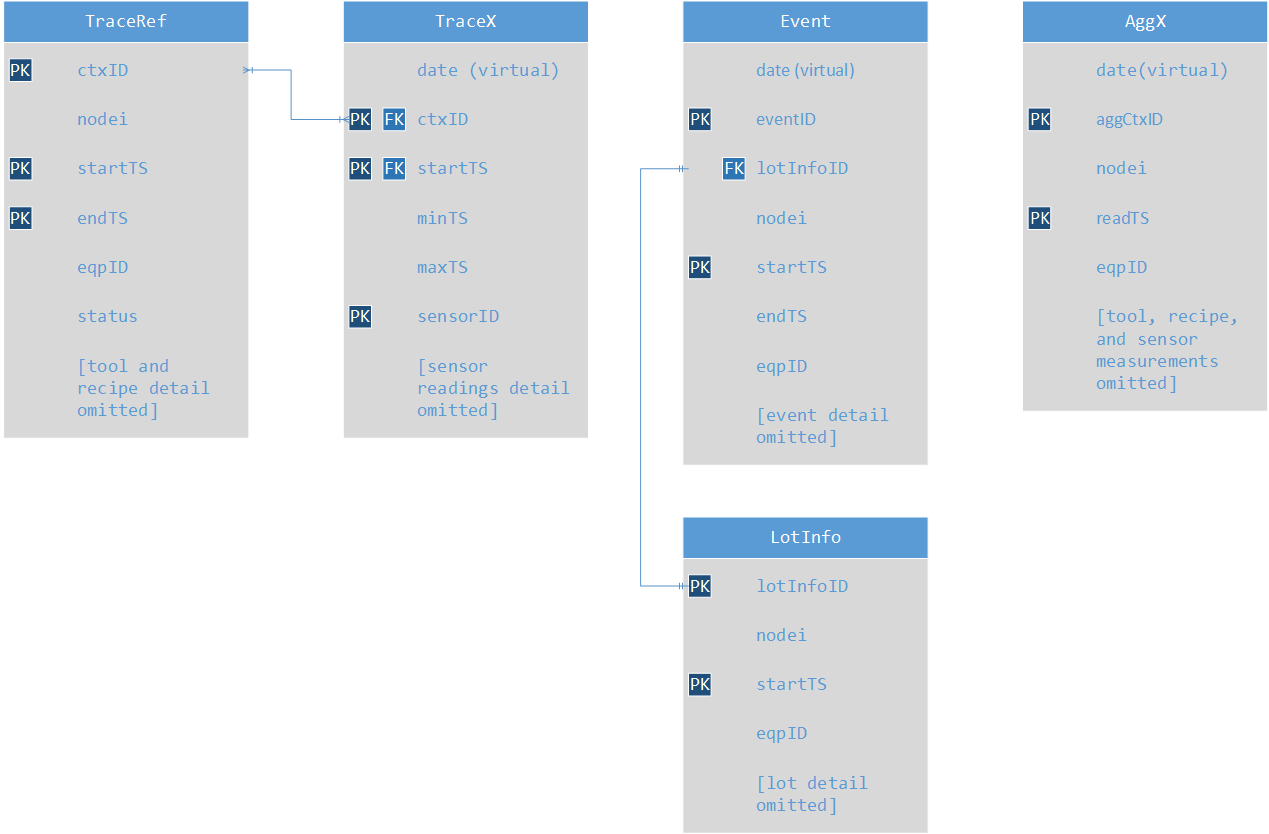

The data-model workload involved multiple tables representing reference or master data, sensor reading, event and aggregation data. This relational model is used in fulfilling streaming analytics and queries spanning real-time and historical data. KX ingests raw data streams, processes and persists data into the following structure. For efficient queries and analytics, KX batches and stores data for similar time ranges together, using one or more sensor or streaming data loaders. The tables and fields used in our configuration are illustrated below.

Test results

Reading and writing to disk

We used the kdb+ nano I/O benchmark for reading and writing data to a file system backed by block storage. The nano benchmark calculates basic raw I/O capability of non-volatile storage, as measured by kdb+. Note the cache is cleared after each test, unless otherwise specified.

Bypassing page cache

With most block storage devices, data is read into page cache to be used by the application. However, reads and writes to Intel Optane persistent memory configured as block storage bypass page cache (in DRAM).

This improves overall system performance and lowers demand on the Linux kernel for moving data in and out of page cache and for overall memory management.
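The mapped-read access pattern can be illustrated with an ordinary memory-mapped file. The sketch below is not the kdb+ nano benchmark; it only shows the kind of call an application makes. The code is the same on any file system. What differs is where the pages come from: on a file system mounted with DAX, the mapping refers to persistent memory directly rather than to copies held in page cache. The demo runs against a temporary file, because the mount point of the test system's EXT4 DAX volume is not given here.

```python
import mmap
import os
import tempfile


def mapped_byte_sum(path, chunk_size=1 << 20):
    """Stream through a file via a read-only memory mapping and sum its bytes."""
    total = 0
    with open(path, "rb") as f:
        # Length 0 maps the whole file; the file must not be empty.
        with mmap.mmap(f.fileno(), 0, access=mmap.ACCESS_READ) as view:
            for offset in range(0, len(view), chunk_size):
                total += sum(view[offset:offset + chunk_size])
    return total


# Demo on a temporary file; on a PMem system the path would instead point
# at a file on the DAX-mounted volume.
with tempfile.NamedTemporaryFile(delete=False) as tmp:
    tmp.write(bytes(range(256)) * 16)
    demo_path = tmp.name

demo_total = mapped_byte_sum(demo_path)
os.remove(demo_path)
print(demo_total)
```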

Read performance (Intel Optane persistent memory as block device vs NVMe storage):

- 2× to 9× faster reading data from 36 different files in parallel

- Comparable to retrieving data from page cache (near DRAM performance)

- 41× better for reading a file in a single thread.

Write performance:

- 42% slower than NVMe devices with 36 threads, due to striping across only 6 DIMM devices vs 24 NVMe drives

- Similar single-threaded write performance across the two configurations

| Test | NVMe (before), 1 thread | NVMe (before), 36 threads | PMem (after), 1 thread | PMem (after), 36 threads | PMem vs NVMe⁴, 1 thread | PMem vs NVMe⁴, 36 threads |
|---|---|---|---|---|---|---|
| Total write rate (sync) | 1,256 | 5,112 | 1,137 | 2,952 | 0.91 | 0.58 |
| Total create list rate | 3,297 | 40,284 | 4,059 | 30,240 | 1.23 | 0.75 |
| Streaming read (mapped) | 1,501 | 12,702 | 61,670 | 118,502 | 41.08 | 9.33 |
| Walking list rate | 2,139 | 9,269 | 3,557 | 28,657 | 1.66 | 3.09 |
| Streaming re-read (mapped) rate (from DRAM for NVMe) | 35,434 | 499,842 | 101,415 | 479,194 | 2.86 | 0.96 |
| random1m | 828 | 12,050 | 1,762 | 24,700 | 2.13 | 2.05 |
| random64k | 627 | 8,631 | 1,905 | 36,970 | 3.04 | 4.28 |
| random1mu | 607 | 10,216 | 1,099 | 14,679 | 1.81 | 1.44 |
| random64ku | 489 | 6,618 | 1,065 | 8,786 | 2.18 | 1.33 |
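The comparison columns are the PMem rate divided by the NVMe rate at the same thread count. The sketch below recomputes the factors for three rows copied from the table. The names are illustrative, and a recomputed factor may differ from the published one in the last digit if the published factors were derived from unrounded rates.

```python
# (NVMe 1 thread, NVMe 36 threads, PMem 1 thread, PMem 36 threads),
# copied from the table above.
RATES = {
    "Total write rate (sync)": (1256, 5112, 1137, 2952),
    "Streaming read (mapped)": (1501, 12702, 61670, 118502),
    "random64k": (627, 8631, 1905, 36970),
}


def pmem_vs_nvme(nvme_1, nvme_36, pmem_1, pmem_36):
    """Return (1-thread factor, 36-thread factor); above 1 means PMem is faster."""
    return pmem_1 / nvme_1, pmem_36 / nvme_36


factors = {test: pmem_vs_nvme(*row) for test, row in RATES.items()}
for test, (f1, f36) in factors.items():
    print(f"{test}: {f1:.2f}x (1 thread), {f36:.2f}x (36 threads)")

# A factor of 0.58 at 36 threads corresponds to the "42% slower" write result.
write_slowdown_pct = (1 - factors["Total write rate (sync)"][1]) * 100
print(f"36-thread write slowdown: {write_slowdown_pct:.0f}%")
```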

Query performance

We tested query performance by targeting data that would be cached in DRAM, on Intel Optane PMem, and on NVMe drives, with parallel execution of each query using multiple threads where possible.

Each query involved retrieving trace data with a range of parameters including equipment, chamber, lot, process plan, recipe, sequence, part, sensor, time range, and columns of data requested. The parameters were randomized for a time range of 10 minutes.

Query response times using Intel Optane persistent memory were comparable to DRAM and 3.8× to 12× faster than NVMe.

| | DRAM RDB (2 query processes) | PMem IDB (8 query processes) | NVMe HDB (8 query processes) | PMem vs DRAM⁴ | PMem vs NVMe |
|---|---|---|---|---|---|
| 1 query at a time | | | | | |
| Mean response time (ms) | 23 | 26 | 319 | 1.17 | 12.10 |
| Mean payload size (KB) | 778 | 778 | 668 | 1 | 1 |
| 100 queries at a time | | | | | |
| Mean response time (ms) | 100 | 82 | 310 | 0.82 | 3.77 |
| Mean payload size (KB) | 440 | 440 | 525 | 1 | 1 |

Two real-time database query processes were configured matching typical configurations, with each process maintaining a copy of the recent data in DRAM. (Additional real-time processes could be added to improve performance with higher query volumes at the cost of additional DRAM.)

Data-processing performance

KX Streaming Analytics enables organizations to develop and execute processes that are intensive in data and storage I/O. We compared a mix of PMem and NVMe storage with an NVMe-only configuration when reading a significant volume of data from the intraday database and persisting it to the historical database on NVMe storage.

By reading data from PMem and writing to NVMe-backed storage, the Optane configuration processed data 1.67× faster (measured in GB/s) and reduced the RAM required by 37%.

| | NVMe only, no PMem (before) | PMem & NVMe (after) | PMem vs NVMe only⁴ |
|---|---|---|---|
| Data processed (GB) | 2,200 | 3,140 | 1.43 |
| Processing time (minutes) | 24.97 | 21.40 | 0.86 |
| Processing rate (GB/s) | 1.47 | 2.45 | 1.67 |
| Max DRAM utilisation⁵ | 56% | 35% | 0.63 |
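The derived figures in this table follow from the raw ones by simple arithmetic, reproduced in the short sketch below. The function and variable names are illustrative.

```python
def throughput_gb_per_s(gigabytes, minutes):
    """Average processing rate in GB/s for a run of the given size and duration."""
    return gigabytes / (minutes * 60)


nvme_only = throughput_gb_per_s(2200, 24.97)   # NVMe only, no PMem
pmem_nvme = throughput_gb_per_s(3140, 21.40)   # read from PMem, write to NVMe
speedup = pmem_nvme / nvme_only

# Peak DRAM utilisation fell from 56% to 35% of system RAM.
dram_reduction_pct = (1 - 35 / 56) * 100

print(f"NVMe only:   {nvme_only:.2f} GB/s")
print(f"PMem & NVMe: {pmem_nvme:.2f} GB/s")
print(f"Speedup:     {speedup:.2f}x")
print(f"DRAM saved:  {dram_reduction_pct:.1f}%")
```

The 1.67× factor therefore describes throughput (GB/s). Elapsed time fell by a smaller amount because the PMem run also processed 1.43× as much data.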

Summary results

Analytics

- Performed within 10% of DRAM for queries involving table joins

- Performed 4× to 12× faster than 24 NVMe drives in a RAID configuration

- DRAM performed 3× to 10× faster when performing single-threaded calculations and aggregations on data

Data processing and I/O operations

- Processed 1.67× as much data per second as NVMe-only storage when data was read from PMem and written to NVMe storage

- 2× to 10× faster reading data from files in parallel

- Speed of reading data similar to page cache (DRAM)

- Single-threaded file-write performance within 10% in both configurations

- Multithreaded file-write performance 42% slower

Infrastructure resources

- Required 37% less RAM to complete key I/O-intensive data processing

- Required no page cache for querying or retrieving data stored in PMem

Business benefits

- Collect and process more data with higher velocity sensors and assets

- Accelerate analytics and queries on recent data by 4× to 12×

- Reduce infrastructure cost by running data-processing and analytic workloads with fewer servers and less DRAM

- Align infrastructure more closely to the value of data by establishing a storage tier between DRAM and NVMe- or SSD-backed performance block storage

Organizations should consider Intel Optane persistent memory where there is a need to accelerate analytic performance beyond what is available with NVMe or SSD storage.

Notes

1. We used software RAID from RAIDIX to deliver lower latency and higher throughput for reads and writes over and above VROC and MDRAID.
   KX Streaming Analytics platform raises its performance with RAIDIX era

2. Intel Optane persistent memory configured in App Direct Mode as a single block device with an EXT4 volume.

3. In our testing, EXT4 performed significantly better than XFS: 1.5× to 12× better.

4. Higher is better. A factor of 1 means the same performance; a factor of 2 means twice as fast as the comparator (200% of its performance).

5. Maximum DRAM utilization as measured by the operating system during the process. It is primarily a function of the amount of data that needs to be held in RAM for query access: the faster the process completes, the less RAM the system requires.